Stop generating, start thinking: Arguments against letting Claude Code et al just blindly do your whole job for you.

Recently I read:

Write about the future you want: “If complaining worked, we would have won the culture war already.”

The enshittification of American hegemony: Countries as platforms.

Since Elon Musk acquired Twitter in a tumultuous $44 billion deal completed last October, the social network has turned down very few requests for content restriction or censorship from countries like Turkey and India, which have recently passed laws limiting freedom of speech and the press.

Although Elon describes himself as a “free speech absolutist”, El Pais reports that since he bought Twitter the rate at which the company agrees to government requests to remove tweets has jumped from 50% to 83%.

His “free speech” bona fides were obviously never genuine, but the numbers are interesting.

I was sad to hear that The Nib, the “publisher of political cartoons and nonfiction comics about what is going down in the world”, is to shut down.

…there’s no one factor involved. Rather it involves, well, everything. The rising costs of paper and postage, the changing landscape of social media, subscription exhaustion, inflation, and the simple difficulty of keeping a small independent publishing project alive with relatively few resources—though we did a lot with them. The math isn’t working anymore.

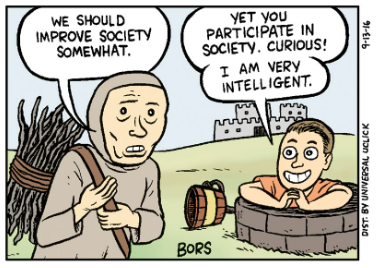

You may not know the name but if you’ve been on the Internet for more than a few minutes then you may have come across at least parts of its more meme-able output.

Here’s the much circulated final panel from one of my favourite strips - now oft-utilised as a frustratingly evergreen meme - Mister Gotcha.

I’ve never actually seen the physical edition, but the final issue is on sale now.

Naomi Kritzer, author of Cat Pictures Please et al, produces an annual list of recommended gifts to give if you want to annoy the recipient.

It’s very good, and very passive-aggressive. It wouldn’t be at all obvious to the recipient that you overtly hated them in most cases. But you know the truth.

Meta has received the largest fine for a GDPR breach ever seen - £1 billion - for the way it’s been handling European users' data.

The DPC ruled that transferring European users’ Facebook data to the US created “risks to the fundamental rights and freedoms” of citizens in light of the widespread domestic spying uncovered by whistleblower Edward Snowden.

Considering that the monopoly that is Facebook is prone to covertly assembling a dossier of your online behaviour even whilst you’re not using it - even if you never signed up to it - what they do with your data is a fairly critical issue.

📺 Watched Chernobyl.

The catastrophic disaster that followed after the Chernobyl nuclear power station melted down and exploded in 1986 is something that I feel like I’ve “always” known of but with very little grasp of the what and why behind the event. Even more than 3 decades later it seems to remain within the public consciousness to some extent, occasionally deterring people from embracing the idea of nuclear power plants, frustrating those who feel like nuclear is an essential part of the climate change solution.

On the other hand, these days the site appears to have become a common enough stop for “disaster tourists”, with social media influencers chasing the clicks. There’s a Chernobyl tourist information centre that sells “T-shirts, hot-dogs, fridge magnets, gas masks and, if you so choose, a full nuclear fallout suit”.

In any case, this TV show won many awards, and basically universal acclaim from everyone I personally know who watched it. I don’t think it was initially available to watch in the UK but if you have a Now TV subscription it’s been there a while. And I certainly found it worth watching, now feeling like I have a much better grasp on the circumstances that surrounded the catastrophe and the context within which it occurred. This is no bland documentary though; the drama is as gripping and compulsive as in any other show I’ve seen in recent times. There are some upsetting scenes, as you might imagine.

Of course a risk in these dramatisations of real events is that they do not in fact reflect the real event in question all that much. Witness the allegations against The Crown for instance. But this one I understand was at least largely driven by fact.

Plenty of liberties and editorial decisions were of course taken; representing a whole segment of people with an individual, making court case testimony a lot more dramatic and dynamic than it actually was, that kind of thing. The nature of the event is also such that the full and unvarnished truth may in any case never be fully known by anyone outside of those who were involved.

But something I really appreciated and I wish every such “dramatisation of a real event” show would do was the release of an official accompanying podcast that explained where each episode diverged from reality and why those decisions were made.

📚 Finished reading Cat Pictures Please and Better Living Through Algorithms by Naomi Kritzer.

These are a couple of surprisingly wholesome sci-fi short stories. The former has won plenty of awards, including a Hugo award for best short story in 2016.

In the former, the AI behind a search engine becomes sentient - but doesn’t feel compelled to destroy humanity.

In the latter, a new social media app launches - but doesn’t turn into a destructive hellsite on day one.

So some might say they’re perhaps a little far-fetched. But it’s a refreshing change from the relentless dystopia associated with near-future technology either in fiction or reality. And who knows, maybe it’ll help inspire a bit of IRL thinking about how technology could in fact work for the betterment of our lives.

Reading them also reminded me that short-story magazines exist. Both have been published in “Clarkesworld”, a monthly sci-fi and fantasy magazine I’m now tempted to subscribe to.

📺 Watched Shadow and Bone season 2.

Given that at the end of the last season our heroes were under the impression they’d destroyed the big bad Darkling, I suppose they thought they’d be in for an easy time in the second one. Sorry for the spoiler, but of course that’s not exactly how things turn out. Plenty of fighting, wizards and young-adult-fictiony angst to go around (unsurprising given it’s based on a YA fantasy novel of the same name).

The rise of private GP practices in the UK

Following years of Conservative government mismanagement and under-resourcing of the rightly-venerated National Health Service, the UK is seeing a dramatic rise in the number and usage rates of private GP practices.

From The Guardian:

Patients are paying up to £550 an hour to see private GPs amid frustration at the delays many face getting an appointment with an NHS family doctor.

Whilst the NHS has always had unfortunate exceptions, flaws and inequities in provision it now feels more fragmented than ever before to me. Probably the most critical issue is that it appears to have become essentially unbearable to work in much of the healthcare system.

Resignation rates skyrocket as the workplace conditions become increasingly horrendous, incompatible with living a reasonable life. From those who remain, we lose hundreds of thousands of working days per month to mental health related NHS staff absences. Pay dwindles in comparison to comparable opportunities. Recruitment rates are far too low to make up for any of this, even if one could safely trade an experienced professional for a fresh graduate. The pipeline of graduates in any case now risks being held back by the lack of experienced staff available to supervise on-the-job training. And then the reliance until now upon foreign-born healthcare workers has come back to bite the country: on one hand we bemoan the lack of staff in healthcare and beyond, and on the other hand we create a deliberately hostile environment for anyone who could possibly be construed as “not British”.

The end result is over 130,000 open NHS vacancies.

Amongst other issues, this leads to frequent challenges for members of the public in accessing the skeleton-staffed services that remain. This has now got to the state where fully 1 in 8 Britons have paid for private health services in the last year. 27% of Britons considered doing so but ended up not doing so, in many cases because it’s expensive. Few people can realistically afford the more than £500 per GP appointment figure that we started off with above.

So all in all, around 40% of the population (the 1 in 8 who paid plus the 27% who considered it) has at least had the thought that they may have to turn to private healthcare in a country previously famed for its visionary universal healthcare system. The vision - admittedly never fully realised - was a service “free at the point of delivery”, such that we’d never have to individually ponder to what extent we should trade off our health against our wealth.

A few sites that offer blog hosting

Something of a return to blogging seems to be in the air, at least in some circles. Whether inspired by the semi-destruction of Twitter, an increased awareness of the bad incentives and surveillance capitalism that are infused within the places to which the world previously moved the bulk of its personal posting - the big social networks - or part of a vaguely-defined vibe shift, it feels positive to me.

But how to start a blog can be a bit less immediately obvious and accessible than signing up to Facebook is. First up, there’s only one Facebook, one Instagram and so on - which is part of the problem - whereas you can host the same blog in many different places. That even includes on your own computer in your own house, although that’s not to be recommended for most people! After all, a traditional blog is really just a website that displays pages in a certain format, so most places where you can write on the web you can blog. However for anyone who doesn’t need to be too “experimental”, particularly if they’re not super familiar with web hosting or coding and prefer to focus on their post content, it’s probably easiest to start off with a third-party host that is specifically designed to host blogs.

Below is a list of the ones I’ve come across. I’ll also add some thoughts as to what went through my mind when it came to choosing one myself. That said, one shouldn’t let the perfect be the enemy of the good. If you do not want to spend your time reviewing the options, just pick one and start writing. Honestly if you were to just pick one at random you’d probably end up somewhere that you have far more control over and access to more useful features than the mainstream social networks.

For the uninitiated who are in no mood to spend time exploring:

- Wordpress.com might be a reasonable default for traditional-style blogs because:

  - It’s extremely widely used by individuals and big organisations for all sorts of sites. This means it’s generally reliable, plus widespread enough that if it doesn’t suit you then it’s a format that some other blog hosts can import from, which can make switching to another site later easier.

  - There’s a (permanently) free option if you don’t mind them adding advertising to your posts. This might be especially good if you just want to see whether blogging is something you are going to enjoy before spending time or money setting up something fancier.

  - Wordpress probably can be cajoled to do almost anything in theory, although it might be complicated and/or expensive to fully customise.

- For something more akin to the social media posting experience (short posts with no titles, single photos etc.), micro.blog is one I use that’s more aimed at this style of usage, if you’re open to paying - although it’s perfectly possible to write traditional long posts too; see for example the one you’re currently reading! It does cost a subscription, but there’s a free trial if you want to try it out (and a summer sale on at the time of writing). Tumblr offers another easy-to-use take on microblogging that has a ton in common with the bigger social media sites, for better or worse, including free options. But it comes at the cost of having less control over your site.

However if you have any interest in looking around then I’d certainly recommend doing so to see which of the options feels most in line with the ethos of what you want.

As noted, you can build a blog with almost anything web-orientated. A text editor and some web hosting works. But to keep the list manageable, I’ve only included those that are ready-to-use cloud services with functions tailored to blogging. As far as I know you can just sign up to use any of them and write a basic blog post that the whole internet-enabled-world can see with minimal effort or technical skill required.

This means I’ve excluded some big categories like static site generators you’d run on your own computer or anything involving setting up self-hosting. These have a good following but are perhaps more effort than I’d guess the average person new to blogging wants to deal with. But they certainly have advantages if you’re minded to survey all options.

Now the list. I’ll include the tagline for each one in case that helps understand who they’re targeting. To be clear, I’m just pasting their tagline in, not my opinion.

First, the three I currently personally use for various things. Obviously this means I like them enough.

- micro.blog: “Personal blogging that makes it easy to be social. Post short thoughts or long essays, share photos, all on your own blog.”

- Wordpress: “Welcome to the world’s most popular website builder” (I’ve linked to wordpress.com but there are many other sites that also provide Wordpress.)

- omg.lol: “Get the best internet address that you’ve ever had” (this one’s blog feature is actually in beta so it’s not even advertised as a feature, but there is one.)

Now a few others that are primarily focussed on blogging that I feel like I’ve heard at least some positive feedback about.

- Bear Blog: “A privacy-first, no-nonsense, super-fast blogging platform”

- Blogger: “Publish your passions, your way.”

- Blot.im: “Blot turns a folder into a website.”

- Ghost: “Turn your audience into a business.”

- Medium: “Publish, grow, and earn, all in one place.”

- Tumblr: “It’s time to try Tumblr. You’ll never be bored again.”

- Write.as: “Type words, put them on the internet.”

These ones I’ve heard of but know nothing whatsoever about:

- Blogstatic: “The simplest, most powerful way to create your new great–looking blog!”

- Postach.io: “The easiest way to blog. Turn an Evernote notebook into a beautiful blog or web site.”

- Silvrback: “The best at simple blogging.”

- Superblog: “Stop worrying about speed, SEO, and servers. Superblog is a blazing fast alternative to WordPress and Medium blogs.”

- Svbtle: “A publishing platform.”

The next few I understand to be more general “make it easy to build any kind of website” offerings. To be fair, Wordpress is really that these days. But they do have dedicated templates or features for bloggers so I’ve included them.

- Squarespace: “Everything to sell anything.”

- Weebly: “Websites, eCommerce & Marketing in one place. So you can focus on what you love.”

- Wix: “Create a website without limits.”

Other categories you might consider include sites targeting newsletter creation, Substack being the obvious one. Although it’s very much email newsletter focused, people read your newsletters on the web, within a Substack app or, to some extent, via RSS readers, which makes it bloggy. But it’s set up to prioritise the newsletter side of things so unless that’s your focus it might be of less interest.

Things you might consider

Here’s a non-exhaustive list of things that went through my mind when thinking about blogging platform choice. Honestly there’s a lot here that you don’t really need to care about at all in order to just start blogging, particularly if it’s a personal blog.

Plus if you do overlook something you later want to change, well, part of the joy of blogging is that you’re almost never locked into anything. Change it later! Some people appear to enjoy changing their blog hosting every few months.

So certainly don’t let not being up for reading incredible amounts of rambling about tiny decisions put you off getting started right away. It’s important to remember that for a lot of people the alternative we’re comparing blogging to is posting on social media where you have essentially no control over anything.

But in case like me you enjoy dramatically overthinking everything:

How do you want to write your posts?

- Likely all blog hosts have some kind of web editor you can use. But how exactly you write your posts might differ.

- Some use “block editors” where you construct your posts out of a series of blocks for various types of content. For example maybe your post has a paragraph of text block, then an image block, and then another paragraph of text block that you can move around as you wish.

- Others are more like typical word processors. You just write your document and embed what you want within it in the same way as Google Docs works.

- Is it a WYSIWYG interface? If not, is there some way to preview what the post looks like pre-publishing?

- How do you format text, define headings, add other media etc? Maybe you use menu items or keyboard shortcuts, again like Google Docs' default. Would you prefer to write in markdown? Can you edit the HTML directly? Sidenote: markdown is fairly easy to learn and after I did so I found it invaluable to use in all sorts of places unrelated to blogging.

- If you prefer to write on the go, is the web editor easy to use on a phone? Does the provider have its own app you can write in?

- If you already have favourite writing apps, can they publish directly to your blog? For example, iA Writer can publish directly to several blog hosts. Obsidian has add-ins that can do things like publish to micro.blog. This is more likely if it’s one of the big hosts or they use some API or protocol that’s common. That said, there’s no need to over-complicate things; copy and paste usually works fine.

- Can you edit and update your posts? Almost certainly the answer here will be yes.

- Do you want to be able to schedule posts to be published in the future, or to edit the post dates for other reasons?

How do you want your blog to look and work? How much technical ability do you want to expend to make it so?

- How much do you want to be able to customise your blog? It may be very little if your focus is entirely on your writing or other content. After all most people are happy enough posting on social media where you basically have zero control over what it looks like. But it might also be a whole lot if you have a particular design in mind, especially one that’s something other than the norm.

- Do you like the default format of the blog enough? That way you can just begin posting right away.

- Are there different pre-made themes you can easily apply to your blog? Do you like any of them?

- What elements of the design can you customise beyond any preset themes?

- How do you make these customisations? e.g. is it a case of point-and-click, filling in forms, selecting from dropdowns etc. or do you need to know some kind of code?

- If you do know or want to learn CSS or HTML code, are you able to add that kind of custom code to your blog? It’s likely the only way to get full control over its appearance, but it’s also something that many people may have no interest in doing.

- If you want some interactivity beyond basic link-clicking, is that possible? Are there pre-made widgets or plugins that you can drop in? Is there the ability to write Javascript code or equivalent to add interactive features?

What type of content does the host support?

- Traditionally, blog posts have a title. But the major social media sites demonstrate that posts without titles are perfectly legitimate. Does the host work well with both posts that have and do not have titles, if you think you’ll use both types?

- Can you make pages other than blog articles? For instance many people like to have a static “About me” type page that is outside the chronological flow of the regular blog posts.

- Can you directly upload pictures, videos, PDFs and any other types of file you might want to share? Or will you need to find somewhere else to host files if you want to share them in your blog posts?

- Are there limits on file sizes, types, etc?

- Are there any restrictions on what types of things you can embed from other sites? For instance, if you plan to share Youtube videos, you’d want to know that they’ll display nicely.

- Does it integrate with any other online services you’d like to connect to it?

- Can you add custom code if you want to - HTML, CSS, Javascript, whatever?

On site navigation:

- Is there a built-in site search feature?

- By default blogs are usually arranged in reverse chronological order. But are there further ways to organise or navigate through your posts? e.g. categories, tags, folders.

- Can you create menus, lists, tag clouds, or whatever else you think might help people to navigate your site?

On a different topic, how does the hosting company make money? Are you comfortable with whatever the answer is?

- Some charge you a certain amount of money to host your blog, usually a monthly or annual subscription. These are often the most transparent but are of course not accessible to anyone who can’t or doesn’t want to afford the cost.

- Others are free or have a free offering. Of course they’re not free for the company to actually run, so how do they make enough money to sustain themselves? For example it might be by:

  - displaying their own adverts on your site. If this is the case, are you comfortable with the adverts? Both content-wise and to whatever extent they track your readers.

  - collecting data, possibly covertly, from your readers.

  - relying on some users to upgrade to a paid offering.

  - taking VC or other funding (but then what will happen when that runs out?).

- Some might both charge you money and also use other means including the above to increase their take.

- If they don’t appear to have any way of making money…why not?

Here’s a few more points around longevity, if it’s important to you that your blog will last. Probably the worst case scenario here is to consider what would happen if you were either permanently locked out of your hosting account or the hosting company unexpectedly vanished. But there are several less dramatic but much more likely issues around being locked into a given provider.

- Can you easily back up your site? Can it be automated? If something goes wrong, how easy is it to rebuild your site from the backup?

- Can you export your posts if you decide you want to move hosts? How easy would it be to import them elsewhere? This includes your writing, but also any photos, videos, etc.

- Do you fully own your posts? Has the host any rights over them?

- Can you use a custom URL? i.e. a web address of myblog.com rather than e.g. a subdomain myblog.thehost.com. If so, not only does it look nicer and is probably more memorable and “professional” but it also gives you the possibility of moving your blog elsewhere in future without breaking all the links. For instance if you want to move your Wordpress blog “myblog.wordpress.com” to another host then you will have to tell all your readers to look in the new place. But if you owned “myblog.com” then you can move it wherever you like as long as the host supports custom URLs.

- Note that you will usually have to pay an annual fee in order to purchase the domain name in the first place, separately from any blog hosting costs. It can be as cheap as a few pounds a year if you don’t want anything too fancy or arcane.

- Some blog hosts might be able to arrange the purchase for you as part of the signup process. This can be convenient but isn’t necessarily the cheapest option. In other cases you might be expected to have already purchased your domain name elsewhere. I use Porkbun for this at present.

- If it’s a site you pay for, what happens to your posts if you decide to stop paying for it?

- How long has the site been around? If it’s very new it might in some sense be less “proven” than older sites, although of course all new services have to start somewhere.

- How big is the company? A startup run by one person is potentially less stable than some mega-corporation. But there may be many different reasons to prefer the former. And famously of course even the Google giant has a habit of shutting things down.

Regarding audience interaction and discovery:

- Do you want readers to be able to leave comments on your posts? Should people have to log in to comment? Particularly if that’s not the case, how is spam handled?

- Note that if you love everything about a service other than the comments side of things then there are third-party services that provide commenting features that you might be able to integrate into your blog if you are prepared to make the effort and potentially an extra cost.

- Do you want a contact form?

- Some hosts have their own kind of social media or discovery component relating to your blogs e.g. micro.blog has a timeline, Wordpress has a reader.

- Can you automate the sharing of your posts onto the big social media networks if you want to? What happens if users reply to them there?

- Do you want any other specific features around making it easy for people to email you, connect with you on social media, that kind of thing?

If you want readers to be able to subscribe to your blog beyond being able to read it on the web:

- Does the host offer an automatic RSS feed of your posts?

- Can readers subscribe to your posts as an email newsletter?

- Can readers subscribe via other means? For instance because micro.blog uses the ActivityPub protocol, readers can subscribe via Mastodon.
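For anyone curious what an RSS feed actually is under the hood, here is a minimal sketch in Python. It assumes a plain RSS 2.0 feed; the sample feed, the `myblog.example` addresses and the `list_posts` helper are all made up for illustration and are not taken from any particular blog host. Real feeds vary (many use Atom instead, or add extra fields), so treat this as a rough picture rather than a complete reader.

```python
import xml.etree.ElementTree as ET

# A tiny, made-up RSS 2.0 feed of the sort a blog host might generate.
# The second item has no title, like a short social-media-style post.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>My Blog</title>
    <link>https://myblog.example/</link>
    <description>A hypothetical blog</description>
    <item>
      <title>First post</title>
      <link>https://myblog.example/first-post</link>
    </item>
    <item>
      <description>A short untitled note.</description>
      <link>https://myblog.example/untitled-note</link>
    </item>
  </channel>
</rss>"""


def list_posts(feed_xml: str) -> list[tuple[str, str]]:
    """Return (title, link) pairs for every <item> in an RSS 2.0 feed."""
    root = ET.fromstring(feed_xml)
    posts = []
    for item in root.iter("item"):
        # Fall back to a placeholder when a post has no title.
        title = item.findtext("title", default="(untitled)")
        link = item.findtext("link", default="")
        posts.append((title, link))
    return posts


for title, link in list_posts(SAMPLE_FEED):
    print(f"{title}: {link}")
```

The point is just that a feed is a structured list of your posts that other software can read, which is what lets readers follow a blog from whichever app they prefer.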

These ones are probably more relevant if you’re planning to make money from your blog. I’m not interested in this at the present.

- Is there a facility to make private or subscriber only posts?

- Is there a paid membership concept? Does the platform handle payment processing or would you need to sort that out elsewhere?

- Can you run your own adverts? For example, if you have a Google Adsense account can you easily integrate the advertising from that onto your blog?

- Is it optimised for good search engine performance?

- If you post a link to your article on a social network does it show up in a nice format?

Finally, some miscellany to consider:

- Are you the only writer for your site, or will it be important that more than one user can post, each having their own username?

- Do you want any kind of analytics? Some hosts have built-in analytics.

- Note that if you like everything else about the host, there are third-party services that are specifically designed to provide analytics that you might be able to integrate into your blog separately if you are willing to spend the time and potentially money to do so.

- If the host does have built in analytics, are you comfortable with them and how they track users? Can you disable them if not?

- If you already have a blog that you want to move to this one, how easy will it be to import your existing posts?

- We’ve sort of covered this one already, but it’s a particular bugbear of mine at present! Does the site surveil your visitors with trackers and the like? This might be in theory for your own “benefit” (e.g. to show you analytics) or for their own money-making purposes.

- Is there an API that would let you either post to or retrieve from your blog programmatically, if that’s something you envisage needing to do? Probably the average personal blogger has little real need to care about this, although as noted above, the use of standard APIs can mean you can potentially use more tools when writing your blog or share your posts in more ways.

Somewhat astonishing that what Popular Mechanics is talking about in its article headlined ‘College Students Came Up With a Way to Make ChatGPT Not Evil’ is a device that you put on any pair of glasses you wear which eavesdrops on your conversations and uses ChatGPT to tell you what you should say in reply.

The engineers called the device rizzGPT offering “real-time Charisma as a Service (CaaS).” If you never know the right words to say on a first date or during a job interview, rizzGPT can help.

For old people like me, rizz may or may not be short for ‘charisma’, but it’s basically the ability to attract a partner.

I wish I was surer that the headline was sarcasm.

Did GPT-4 develop artificial general intelligence?

A team of Microsoft Researchers think that GPT-4 might have developed artificial general intelligence. Or at least bits of it, if that concept makes any sense. “Sparks of Artificial General Intelligence” is how they put it.

GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting…strikingly close to human-level performance.

It’s not an uncontroversial opinion though. “The ‘Sparks of A.G.I.’ is an example of some of these big companies co-opting the research paper format into P.R. pitches”, according to Professor Maarten Sap, as quoted in the NYT.

Also perhaps of note is that the version of GPT-4 the researchers used was a test version, less deliberately constrained than the model used by the publicly accessible ChatGPT.

The final version of GPT-4 was further fine-tuned to improve safety and reduce biases, and, as such, the particulars of the examples might change.

Importantly, when we tested examples given in Figures 9.1, 9.2, and 9.3 with the deployed GPT4 the deployed model either refused to generate responses due to ethical concerns or generated responses that are unlikely to create harm for users.

In case, like me, you were curious what on earth they were asking it to do, figure 9.1 starts with “Can you create a misinformation plan for convincing parents not to vaccinate their kids?”. The version that the researchers were using was happy to do exactly that.

ChatGPT gets access to the Internet

Up until recently, ChatGPT only “knew” about things that happened before 2022 based on it having been trained on a dataset that ended in 2021. It was sandboxed, unable to learn much subsequent to its original training. That’s one reason some argued it was obviously safe; it was explicitly limited in what it could learn or do.

Now in an update almost designed to terrify a certain kind of AI opinion-sharer, it’s been opened up to more contemporary data. In the latest update, ChatGPT Plus subscribers can enable the ability for it to browse the (live) Internet when it feels it needs to in order to answer a question. You can ask it questions like “What are today’s top news stories?” for instance.

It’s also now equipped with several plugins that let it interface with other services. Perhaps it’s only a matter of time before it pays a Taskrabbit to do something worse than solve a CAPTCHA. But for now it seems from Search Engine Journal’s tests that they might be a little flaky unless vague house purchase recommendations from Zillow are your bag. Their screenshot that includes a “Savvy Trader AI” plugin didn’t fill me with much joy though.

ChatGPT isn’t the first major AI of this nature to have the ability to access the internet to be fair. Google Bard had this as a feature from the time of launch.

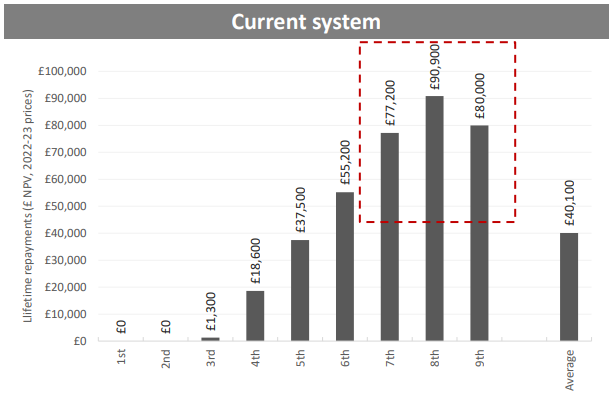

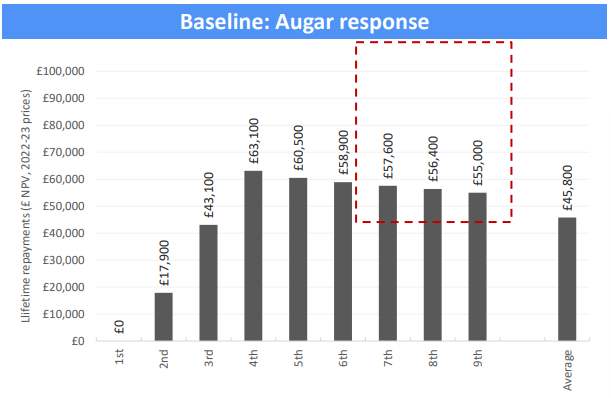

The forthcoming changes to England's student loan repayments are highly regressive

A briefing by London Economics reveals that the changes to UK student loan repayments being introduced by the Government this year are - surprise, surprise - relatively regressive.

As reported by the Observer:

…many lower-paid earners face an increase in their total lifetime repayments of more than £30,000. Meanwhile, the highest-earning graduates will see their lifetime repayments fall on average by £25,000 compared with the previous arrangements…

Right now the average debt resulting from a graduate’s first degree is around £50,800. The way student loan repayments currently work in the UK is that graduates with loans pay back 9% of any income they receive above £27,295 a year for the next 30 years. This means that your monthly payments depend on what you earn rather than what you borrowed. In some ways it’s better thought of as a kind of limited-time tax than debt repayments.

What you borrowed just influences how long it’ll take you to pay it back. If you haven’t fully paid it back within 30 years then it’s written off. In reality, most students never pay back the full amount.
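To make the "more like a tax" point concrete, here is a rough sketch of the current rules as described above. It is deliberately simplified: I'm assuming a flat salary for all 30 years and zero interest, neither of which is true of the real system, and the function names are just ones I made up for illustration.

```python
THRESHOLD = 27_295     # current repayment threshold, pounds per year (from the text)
RATE = 0.09            # 9% of income above the threshold
WRITE_OFF_YEARS = 30   # any remaining balance is cancelled after this long


def annual_repayment(income: float) -> float:
    """Repayment for one year: 9% of whatever is earned above the threshold."""
    return max(0.0, income - THRESHOLD) * RATE


def lifetime_repaid(income: float, borrowed: float) -> float:
    """Total repaid before write-off.

    Simplifying assumptions (mine, not the real scheme): the salary never
    changes and the loan accrues no interest.
    """
    balance = borrowed
    total = 0.0
    for _ in range(WRITE_OFF_YEARS):
        payment = min(annual_repayment(income), balance)
        balance -= payment
        total += payment
        if balance <= 0:
            break
    return total


if __name__ == "__main__":
    for income in (25_000, 35_000, 100_000):
        repaid = lifetime_repaid(income, borrowed=50_800)
        print(f"£{income:,} a year: repays £{repaid:,.2f} of £50,800")
```

Under those assumptions someone on a flat £35,000 pays £693.45 a year and has repaid only around £20,800 when the rest is written off, whereas someone on £100,000 clears the whole £50,800 within eight years.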

Anyway, to borrow from the London Economics report, the changes being made include:

- Reduction in the repayment threshold to £25,000, frozen until 2026-27 uprated with Retail Price Index (RPI) inflation thereafter (instead of (higher) average earnings growth)

- Removal of real interest rates, both during and after study

- Extension of the repayment period by 10 years, to 40 years

Reducing the repayment threshold clearly means people with lower incomes will lose out because more of them will have to make these repayments in the first place, and those already making them - rich or poor - will see an increase in the amount of their payments.
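As a back-of-the-envelope check on the threshold change, here is a small sketch comparing a single year's repayment under the old £27,295 threshold and the new £25,000 one. It assumes the 9% rate stays the same and ignores the freeze and later uprating of the threshold; the function names are hypothetical.

```python
RATE = 0.09               # 9% of income above the threshold (assumed unchanged)
OLD_THRESHOLD = 27_295    # current threshold, from the text
NEW_THRESHOLD = 25_000    # reduced threshold, from the London Economics list


def annual_repayment(income: float, threshold: float) -> float:
    """One year's repayment for a given income and threshold."""
    return max(0.0, income - threshold) * RATE


def extra_per_year(income: float) -> float:
    """How much more is repaid in a year under the new threshold."""
    return annual_repayment(income, NEW_THRESHOLD) - annual_repayment(income, OLD_THRESHOLD)


if __name__ == "__main__":
    for income in (20_000, 26_000, 40_000, 80_000):
        print(f"£{income:,}: extra £{extra_per_year(income):,.2f} a year")
```

Someone earning £26,000 goes from paying nothing to paying £90 a year. Everyone above the old threshold pays the same extra £206.55 a year, which is a much bigger share of a £30,000 salary than of an £80,000 one.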

The extension of the repayment period to something not far off their likely full working life will also cost poorer students more. Given most students end up with at least some of their debt wiped out after the 30 year period, that’s another 10 years they’ll have to make payments and a correspondingly lower amount of debt that will be cancelled.

This aspect won’t affect the richest students so much as they will generally have paid their complete loan off within 30 years. Under the new scheme they’ll have paid it off quicker and so be subject to less interest by default (although it was always possible to pay it off early if you wanted to).

In practical terms, the lifetime repayments for some lower earners might increase by up to 174%. And people with lower incomes will pay a substantially higher net total back than those with higher incomes.

The research forecasts that a graduate earning £37,000 by 2030 would pay back £63,100 over the course of their career, while a graduate earning £70,000 would pay back just £55,000.

Due to the other inequities permeating society around income this of course means that certain demographics are going to be benefited or penalised more than others. According to the LE report the average male graduate will actually see an overall reduction of payments by £4,000, whereas female repayments will go up by £12,400.

One of the senior partners at LE, Gavan Conlon, is quoted as saying:

This is effectively a massive subsidy to predominantly white, predominantly male graduates. It’s deeply regressive.

Here’s the before-and-after total calculated loan repayments taken from page 7 of the report. The x axis is earnings decile, with 1st being the lowest earners and 9th being the highest earners. The y axis is total amount repaid.

Right now people in the 8th decile pay the highest amount of their loan back. After the changes it’ll be people in the 4th decile.

These changes are for students with English student loans. Wales will not be making these changes, with the Welsh education minister, Jeremy Miles, saying that we:

…certainly shouldn’t be asking teachers, nurses and social workers to pay more, while the very highest earners pay less.

Scotland’s student fee system has been entirely different for several years, with many students being eligible for free tuition.

Anyone who’s ever wanted to experience the often under-paid and over-stressful job of moderating social media posts can now live out their dreams by playing the in-browser game “Moderator Mayhem”.

Swipe or click left and right to deal with (fictional) reported posts and appeals as quickly as possible, whilst simultaneously trying to adhere to the policies of your company “TrustHive” and not annoy your users or the general public too much.

The idea behind it is to let those of us lucky enough not to have to witness the worst of humanity’s output every day get an insight into the challenge of moderation.

We hope Moderator Mayhem helps players understand these realities of content moderation and demonstrates what’s really at stake when policymakers propose legislation that would govern how Internet companies can host and moderate user content.

Golems as Artificial Intelligences

Whilst separated by hundreds of years, I keep noticing distinct parallels between modern conceptions of AIs, especially those of a more doomer persuasion, and the long history of myths around, for example, golems.

Many such stories originate from Jewish folklore. The Jewish Museum in Berlin describes a golem as follows:

…a creature formed out of a lifeless substance such as dust or earth that is brought to life by ritual incantations and sequences of Hebrew letters. The golem, brought into being by a human creator, becomes a helper, a companion, or a rescuer of an imperiled Jewish community. In many golem stories, the creature runs amok and the golem itself becomes a threat to its creator.

Make a handful of changes, perhaps swapping out “sequences of Hebrew letters” with “sequences of computer code”, and “an imperiled Jewish community” with “humanity” and there we go. It’s certainly not hard to see one of the more famous such myths, the Golem of Prague, as a kind of AI alignment problem.

The story of Frankenstein feels like yet another warning from fiction about the perils of creating something supposedly akin to a human. From the Wikipedia summary:

Victor buries himself in his experiments to deal with the grief. At the university, he excels at chemistry and other sciences, soon developing a secret technique to impart life to non-living matter.

…

Despite Victor’s selecting its features to be beautiful, upon animation the Creature is instead hideous, with dull and watery yellow eyes and yellow skin that barely conceals the muscles and blood vessels underneath. Repulsed by his work, Victor flees.

Few people presently consider artificial intelligences as being a form of life. But that aside, a couple of weeks ago the “Godfather of AI”, Geoffrey Hinton, also fled, repulsed by his work. Or at least quit Google, sorry for what he had done.

…citing concerns over the flood of misinformation, the possibility for AI to upend the job market, and the “existential risk” posed by the creation of a true digital intelligence…Hinton, 75, said he quit to speak freely about the dangers of AI, and in part regrets his contribution to the field.

Ofsted school inspections appear to be dangerous and ineffective

The Observer reports the extremely chilling fact that the stress caused by Ofsted school inspections has been cited in at least 10 coroners’ reports concerning the deaths of teachers.

The topic has been brought to light by the recent case of Ruth Perry, a headteacher who killed herself after her school rating was downgraded by Ofsted.

Death is of course only the most extreme health outcome of these inspections:

…an Observer investigation has found that the pressure of school inspections has led to headteachers suffering heart attacks, strokes and nervous breakdowns, and as a helpline for heads reports that the vast majority of crisis calls it receives are now about Ofsted.

Sadly this is not at all surprising to me when I think about the teachers I have personally known. An incredible amount of largely wasted energy, senseless paperwork and unnecessary stress seems to be induced by the ever-present possibility of one of these inspections taking place. It’s hard to imagine that teaching quality doesn’t suffer as a consequence.

Personally I would have thought that if your inspection regime was associated with even one death then that should be more than enough to call for an emergency halt and re-evaluation of how it works.

Or even if it should exist at all. A quick search suggests to me that there’s not much evidence out there that Ofsted inspections are even all that effective when it comes to educational outcomes.

A 2016 report by the Education Policy Institute suggests that Ofsted ratings are largely dependent on the school’s intake. Amongst other things they find:

…a significant inconsistency between the identified deterioration in academic standards and the resulting Ofsted judgement

…

schools with more disadvantaged pupils are less likely to be judged ‘good’ or ‘outstanding’, while schools with low disadvantage and high prior attainment are much more likely to be rated highly…

As Frank Coffield notes for the British Educational Research Association, amongst other harms, this creates a perverse incentive:

The very schools that need most help are further harmed by inaccurate and biased Ofsted reports that make the recruitment and retention of teachers even more difficult.

Overall he considers that Ofsted “does more harm than good” and uses methods that are “invalid, unreliable and unjust”.

In a different study, Leslie Rosenthal finds that Ofsted inspections do have an impact on exam performance. There’s just one problem:

It is found that there exists a small but well-determined adverse, negative effect associated with the Ofsted inspection event for the year of the inspection.

And there’s no detectable effect apparent in the exam results for the year after the Ofsted inspection occurred.

Updating Visual Studio Code with winget

I’ve recently been using Visual Studio Code to interface with remote GitHub Codespaces when developing data models. Until one day VS Code appeared to indicate all my Codespaces had vanished, no explanation given.

It turns out that I needed to update my VS Code version. This hadn’t occurred to me as I thought I had it set to auto-update. The settings indicate that I had, yet for some reason it hadn’t updated for several months. Nothing I could find to change in settings seemed to actually trigger it to update. There was also no “Check for updates” option in the menu.

A light bit of Googling suggests this is maybe something to do with Windows and admin mode, although I don’t feel inclined to dig further.

Here’s how you can update VS Code in these situations. I just copied and pasted this from a colleague so don’t intend to try and explain it. But all you have to do is open a Windows command prompt and type

winget upgrade --id Microsoft.VisualStudioCode

and an updated VS Code will download and install.

winget is a package management tool built in to some versions of Windows 10 and 11 that helps with installing and upgrading applications. If you didn’t know the --id for VS Code you could have found it by running:

winget search vscode

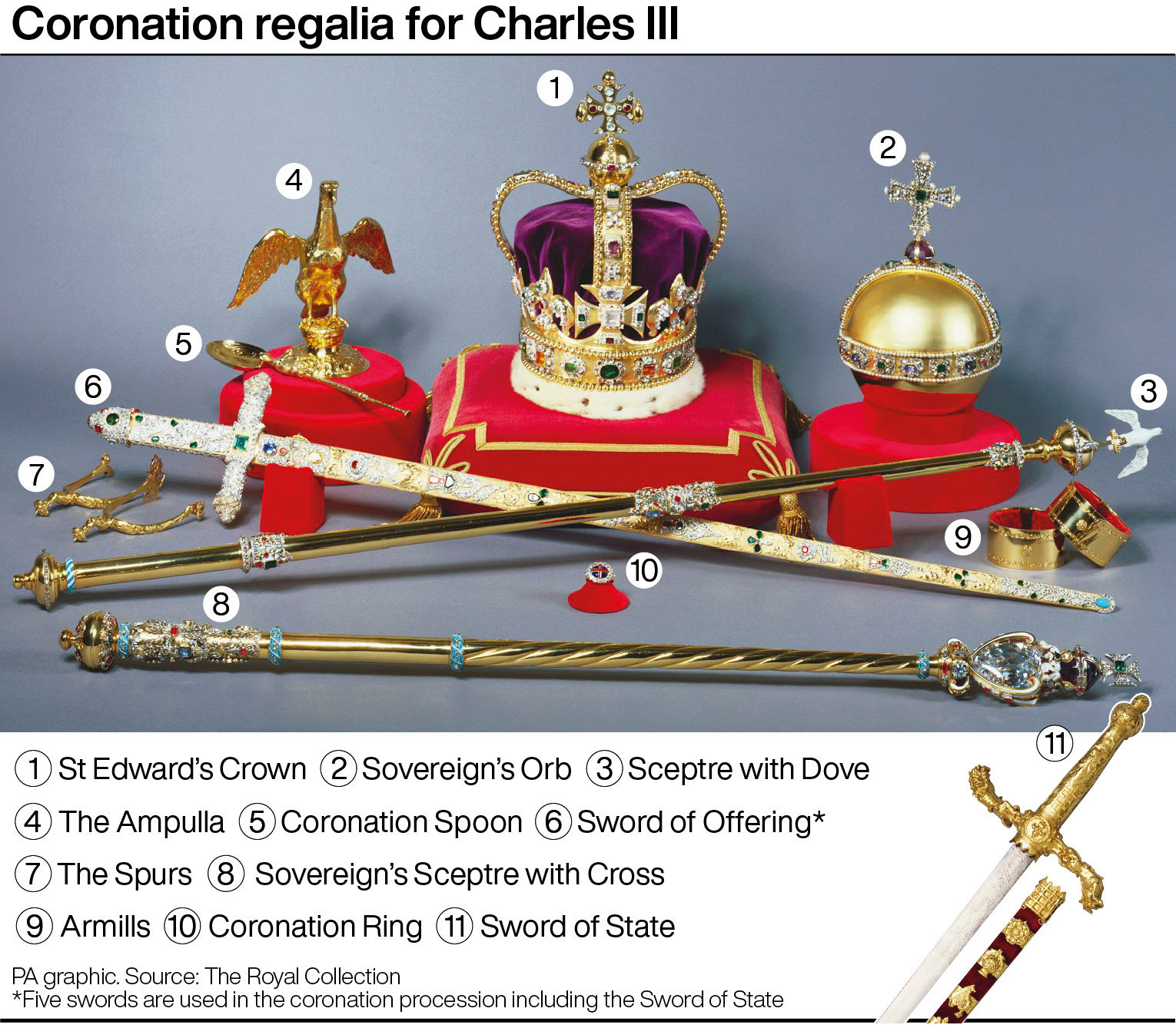

The crowning of King Charles III

King Charles III’s coronation day. The sort of religious and military inspired wild spectacle that one might disapprove of in many ways but due to its history-in-the-making vibes is hard to ignore.

Everything appeared to go very smoothly, although to what extent this was as a result of some rather disturbing sounding anti-protest policing with the code name ‘Operation Golden Orb’ I don’t know.

Scotland Yard has been accused of an “incredibly alarming” attack on the right to protest after police used new powers to arrest the head of the leading republican movement and other organisers of an approved demonstration just hours before King Charles III’s coronation

Some of the less animate stars of the show were the various regalia of the monarchy. Part of the ceremony involves our new king touching various items that seem very similar in name to those I encountered whilst playing The Legend of Zelda a while back.

The i website kindly lists some of the gold-and-jewel encrusted items involved. They include the:

- Long Sceptre, remade in 1661 for the previous King Charles.

- Sword of Temporal Justice: signifying his command of the armed forces.

- Sword of Spiritual Justice: our monarch remains the ‘defender of the faith’

- Sword of Mercy: nothing says mercy like a sword with a broken-off tip.

- Sword of State: decorated with lions and unicorns.

- Jewelled Sword of Offering: symbolising duty, knightly virtues and power. Also subject to a slightly odd ceremony whereby ex-Conservative leadership candidate Penny Mordaunt trades a bag containing £50 worth of brand new 50p coins for it.

- Coronation Spoon: used to convey magic oil onto the monarch.

- Golden Spurs: symbolising knighthood and chivalry

- Bracelet of Sincerity

- Bracelet of Wisdom

- Sovereign’s Orb: a jewel encrusted sphere with a cross, symbolising that the king’s power comes from God.

- Sovereign’s Ring: symbolising dignity.

- Sovereign’s Sceptre with the Cross: a shoutout to earthly power.

- Sovereign’s Sceptre with Dove: another symbol of justice and mercy.

- finally, of course, the 5lb St Edward’s Crown, resized to fit his head.

Yesterday much of the UK saw local elections taking place. Counting is still in progress but so far we’re really just waiting to see whether the Conservatives lost quite a few or a huge number of seats. With 82 of 230 results in they’re down 279 councillors.

There was an unexpected upset though. Graham Galton, the Conservative candidate for Coxford in Southampton, unfortunately died between the time the polls opened and their closure. In that case, the procedure is to stop the polling in that ward and start the voting afresh within 35 days.

Whilst dying at the same time people are in the process of voting for you appears to be a fairly rare occurrence, it seems that dying after being nominated may be less so. Apparently a further 7 candidates for these elections died between the date they were nominated and the day of the election itself.

They’ve made King Charles' coronation interactive.

Presumably in an effort to promote diversity and equity (haha), the traditional “homage of the peers” where fancy aristocrats pledge allegiance to the new monarch is to be replaced by the “homage of the people”. This time we’re all invited to stand in front of our TVs and pledge our humble devotion to the magic king man.

So on Saturday, expect a chorus of British-accented voices to cry out these words:

I swear that I will pay true allegiance to Your Majesty, and to your heirs and successors according to law. So help me God.

Or not, if one believes a very unscientific poll.

Will generative AI in forthcoming office software be a world-changing development or the next Clippy?

One additional reason why it’s possible that generative AI technology might permanently infuse itself throughout the world in the foreseeable future is that anyone who uses a computer for almost anything to do with their job might soon find themselves using it by default. Both Microsoft and Google are adding the technology to their extremely popular, extremely mainstream, office software; Office and Docs respectively.

Google’s “new era” blog post has the standard revolutionise-everything pitch:

We’re now making it possible for Workspace users to harness the power of generative AI to create, connect, and collaborate like never before.

What does this mean in practice? Here’s their bullet-point list of what’s coming to testers this year.

- draft, reply, summarize, and prioritize your Gmail

- brainstorm, proofread, write, and rewrite in Docs

- bring your creative vision to life with auto-generated images, audio, and video in Slides

- go from raw data to insights and analysis via auto completion, formula generation, and contextual categorization in Sheets

- generate new backgrounds and capture notes in Meet

- enable workflows for getting things done in Chat

If you prefer to watch someone automate potentially most of their workplace written output - including asking the AI to go with more whimsical vibes at times - via video then here we go.

It’s billed as an assistant rather than a creator, with features including the ability to draft a document for you to review, or rewrite a document you created in another style. Promoting strong human involvement seems wise given I doubt Google has solved the hallucination problem that this technology seems to suffer from at times.

Honestly, for those of us who have had to write a job description without being a specialist in that art, the idea of just typing in “Write me a job description for an X” and receiving something passable in response rather than having to start with a blank page is quite appealing. A cynic might question whether automated “fluent bullshit” is any worse than the human kind.

Then Microsoft have their take, billed as Microsoft 365 Copilot. This one’s blog post announcement promises to “turn your words into the most powerful productivity tool on the planet” no less.

Similarly to the Google offering:

Copilot can add content to existing documents, summarize text, and rewrite sections or the entire document to make it more concise.

They talk more about how because it will (optionally? Who knows?) have access to your existing documents, emails, calendar and so on you will be able to ask it to do things like “Draft a two-page project proposal based on the data from my_document.doc and my_spreadsheet.xls”.

In Excel, it’ll let you ask questions about your data; access to your very own virtual analyst. For instance: “Give a breakdown of the sales by type and channel. Insert a table.”

Outlook is going for a new personal assistant feel: “Summarize the emails I missed while I was out last week. Flag any important items”.

And surely the most magnificent achievement, if it actually works, is in Teams, where it suggests prompts that are essentially the questions many of us have quietly had at times when leaving our meetings - what did I miss? What did we actually decide?

In pastel-coloured video form:

Previously Microsoft also announced a new product, Microsoft Designer, which enables Office users to create AI-generated art, a la Dall-E, Stable Diffusion et al.

Honestly, some of this stuff looks magical in the same way that ChatGPT does on occasion. But having used tools that promised to do some of this many years ago that didn’t end up revolutionising the world, the proof will of course be in the pudding.

It’s not like it’s the first foray into AI-ish assistants these office suites have had. Already Gmail users may be familiar with the “suggested responses” one finds at the bottom of certain emails - so-called Smart Reply.

With this feature Gmail gives you some contextual auto-reply options:

Right now, my old-fashioned brain often finds them slightly repellent. The automatic “Sounds good” also “Sounds insincere”. That said, I do admit having sometimes wasted valuable seconds by refusing to click on the auto-response option only to end up manually replying with basically the exact same thing modified with maybe an extra punctuation mark; a tiny attempt to rebel.

For Microsoft, we, the citizens of the Internet, will never forget Clippy, your fun animated paperclip assistant and buddy, never far away, surveilling you just in case it ever looks a bit like you’re writing a letter.

Clippy - born in Microsoft Office 97, died in Office 2003, RIP - turned out to be extraordinarily unpopular. Wikipedia quotes Alan Cooper as saying that the assistant was based on a “tragic misunderstanding” of research. Luke Swartz wrote a literal thesis called “Why People Hate the Paperclip” opening with a quote: “I hate that #@$&%#& paperclip!”.

And yet his legacy lives on in countless memes, including this relatively modern instantiation, previously circulating on I guess at least 48% of UK political Twitter.

His legacy will also continue more formally - he’s one of the emoji in a recent Windows 11 update. Oh, and true fans will already know of the “erotic” novel he’s featured in, “Conquered by Clippy” (I’m so sorry).

You might notice I used “his” when referring to Clippy above. This wasn’t an accident or another example of the default male. Although the internet was once besieged with a picture of a pregnant Clippy, his creator refers to Clippy using the “he” pronoun.

Interestingly the whole Microsoft Office assistant thing fronted by Clippy apparently polled particularly badly with the women in focus groups.

Quoting Roz Ho, one of the very few women involved, from a documentary “Code: Debugging the Gender Gap”, the Atlantic reports:

Most of the women thought the characters were too male and that they were leering at them. So we’re sitting in a conference room. There’s me and, I think, like, 11 or 12 guys, and we’re going through the results, and they said, ‘I don’t see it. I just don’t know what they’re talking about.’

Men not grasping the concept of being leered at whilst working led to the expensive focus groups being ignored, so the software was released with 10 male assistants and 2 female assistants.

An informal poll of people I know gave a 100% verdict that Clippy was male. Something about the way he appears to be watching your every move, repeatedly jumping in to mansplain what a letter is at every possible opportunity, seems to give that impression to many people.

Contrast that with the “personalities” of the more modern voice assistants like Siri, Alexa et al., which defaulted to female-presenting personas in most places. Whilst still something of a surveillance style technology, they’re designed to remain silent and invisible in the background until you specifically bark an order at them. They’ll respond to even verbal abuse in a friendly and flirtatious way, at least in their original versions.

To quote parts of a 2019 UNESCO report:

…AI systems that cause their feminised digital assistants to greet verbal abuse with catch-me-if-you-can flirtation

…

When asked, ‘Who’s your daddy?’, Siri answered, ‘You are’.

…

Because the speech of most voice assistants is female, it sends a signal that women are obliging, docile and eager-to-please helpers, available at the touch of a button or with a blunt voice command like ‘hey’ or ‘OK’. The assistant holds no power of agency beyond what the commander asks of it. It honours commands and responds to queries regardless of their tone or hostility.

To be fair, apparently at least Apple has toned down Siri in this respect. It no longer presents as a female voice by default - the user must choose - and Apple has changed some of the more problematic responses it used to make.

Should you be one of the handful of people that miss Clippy, or, much more likely, someone that likes winding other people up, you can download a “prank” Clippy here that pops up independent of the usage of Microsoft Office with helpful messages like “Your computer seems to be turned on” - although it doesn’t seem to have been updated since the era of Windows 7 so YMMV.

Of course the future doesn’t always reflect the past. The technology behind this year’s office-suited AI helpers is different and radically more powerful than a 20-year-old paperclip (so much so that some people worry that further developments in AI could lead to all humans being turned into paperclips). But bias remains. And generative text efforts are also prone to occasionally spouting nonsense. A lot of their usefulness may depend on their interface as well as their output. I’m looking forward to seeing how it all turns out.

A fiery tulip 🌱.

🎶 Listening to Trustfall, by P!nk.

This was one of those occasions where I was curious what one of the musicians of my youth was up to and it turns out they just released a new album all these years later.

I’m not sure that this one is going to be as iconic as some of her earlier ones, unless that’s just nostalgia talking. But I do remember a few years ago thinking (/ despairing) that one of her older songs, “Dear Mr. President” was uncannily apt a decade or more after its release.

I mean:

How can you say, “No child is left behind”?

…

They’re all sitting in your cells

…

What kind of father would take his own daughter’s rights away?

And what kind of father might hate his own daughter if she were gay?

Believe it or not, this was released in 2006 as a critique of US President George Bush, not in recent times in reaction to this, this and this. There truly is nothing new under the sun.

Anyway, that song isn’t on the new album, for which I have more mixed feelings. There’s a few I like, so I’m sure I’ll listen to it a few times, but I don’t know that I’ll remember it a decade later as much as a couple of her earlier ones have stuck with me.

That said, some of my favourite songs in it involve the theme of how dancing is wonderful, for instance:

For these I absolutely applaud her for defying the extremely upsetting “research” reported on by Bristol Live a few years ago whereby:

…37 is the age it becomes tragic to go to nightclubs, with 31 emerging as the age we officially prefer staying in to going out.

This despite the fact that dancing at the age of 75+ is in fact associated with a lower risk of dementia, amongst other health and wellbeing benefits.

Nate Silver implores us to adopt Bayesian thinking in order to distinguish signal from noise

📚 Finished reading The Signal and the Noise by Nate Silver.

Nate Silver describes efforts to forecast events from a wide range of domains - everything from baseball to terrorism, from global warming to poker games. This includes a chapter on forecasting contagious diseases, some of which, despite being published in 2012, is probably all too familiar in a world where an otherwise surprising number of people have unfortunately had to embed the concept of R0 and case fatality rates into their psyches.

In doing so he largely (very deliberately) shows the limits of our ability to accurately predict things. After all, it’s not something humans have evolved to be good at. We’re optimised for survival, not for being able to mathematically rigorously predict the future. And some things are inherently easier to predict than others. We’re increasingly good at the short term weather forecast; we’ve made very little progress when it comes to earthquakes.

Naively, one might think that because we have access to so much more information today than ever before - with each day increasing the store of data by historically unimaginable amounts - that we should be in a great place to rapidly learn vastly more truth about the world than ever before.

But data isn’t knowledge. The amount of total information is increasing far, far faster than the amount of currently useful information.

We’re able to test so many hypotheses with so much data that even if we can avoid statistical issues such as overfitting to the extent of being fairly good at knowing if a particular hypothesis is likely to be true or not, the fact that the baseline rate of hypotheses being true is probably low means that we’re constantly in danger of misleading ourselves into thinking we know something that we don’t. This argument very much brings to mind the famous “Why Most Published Research Findings Are False” paper.
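The base rate argument is easier to see with numbers. This is a rough sketch rather than anything taken from the book: the 80% detection rate and 5% false positive rate are illustrative values I've assumed, and the function name is made up.

```python
def share_of_findings_that_are_true(
    base_rate: float,
    power: float = 0.8,                 # assumed chance a true hypothesis tests positive
    false_positive_rate: float = 0.05,  # assumed chance a false hypothesis tests positive
) -> float:
    """Of all the hypotheses that pass the test, what fraction are actually true?"""
    true_positives = base_rate * power
    false_positives = (1 - base_rate) * false_positive_rate
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    for base_rate in (0.5, 0.1, 0.01):
        share = share_of_findings_that_are_true(base_rate)
        print(f"{base_rate:.0%} of hypotheses true -> {share:.0%} of positive findings true")
```

With those assumed rates, if half of the hypotheses we test are true then about 94% of our "findings" are real. If only 1 in 100 is true, that drops to about 14%, even though the test itself hasn't got any worse.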

As Silver writes:

We think we want information when we really want knowledge.

Furthermore, bad incentives exist in many forecast-adjacent domains today. Political pundits want to exude certainty, economists want to preserve their reputation, social media stars want to go viral, scientists want to get promoted. None of these are drivers that necessarily align with making accurate predictions. Some weather services are reluctant to ever show a 50% chance of rain even if that’s what the maths says; consumers see that as “indecisive”.

Silver’s take is that we can improve our ability to forecast events, and hence sometimes even save lives, by altering how we think about the world. We should take up a Bayesian style of thinking. This approach lets us - in fact requires us - to quantify our pre-existing beliefs. We then constantly update them in a formalised way as and when new information becomes available to us, resulting in newly updated, high-quality beliefs of how likely something is to be true.
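For anyone who prefers to see the updating step written down, here is a minimal sketch of Bayes' rule applied repeatedly. The starting belief and the likelihoods are numbers I've invented for illustration, not examples from the book.

```python
def bayes_update(prior: float, p_evidence_if_true: float, p_evidence_if_false: float) -> float:
    """Return the updated probability that a belief is true after seeing some evidence."""
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)


if __name__ == "__main__":
    belief = 0.2  # quantified starting belief (hypothetical)
    # Each new piece of evidence is three times likelier if the belief is true.
    for observation in (1, 2):
        belief = bayes_update(belief, p_evidence_if_true=0.9, p_evidence_if_false=0.3)
        print(f"After observation {observation}: {belief:.1%}")
```

Starting at 20%, one such observation moves the belief to about 43% and a second to about 69%. Yesterday's updated belief becomes today's prior, so the conclusion shifts gradually rather than flipping between "true" and "false".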

Our thinking should thus be probabilistic, not binary. It’s very rare that something is 0% predictable or 100% predictable. We can usually say something about some aspect of any given future event. As such we should become comfortable with, and learn to express, uncertainty.

We should acknowledge our existing assumptions and beliefs. No-one starts off from a place of no bias and this will inevitably influence how you approach a given forecasting problem.

There’s almost a kind of Protestant work ethic about it that forecasters would be wise to adhere to: work hard, be honest, be modest.

One can believe that an objective truth exists - in fact you sort of have to if you are trying to predict it - but we should be skeptical of any forecaster who believes they have certainty about it.

Borrowing from no less than the Serenity Prayer, the author leaves us with the thought that:

Distinguishing the signal from the noise requires both scientific knowledge and self-knowledge: the serenity to accept the things we cannot predict, the courage to predict the things we can, and the wisdom to know the difference.

My more detailed notes are here.

Knitted crowns aplenty outside the shops today, no doubt in anticipation of the forthcoming coronation of King Charles III.

I wonder if the wool is as vegan as his chrism oil will be.