Recently I read:

The left is missing out on AI: ‘…ceding debate about a threat and opportunity to the right.’

In 1984, An Unemployed Ice Cream Truck Driver Memorized A Game Show’s Secret Winning Formula. He Then Went On The Show: …and became rich, for a while.

'Outrage' erupts as Keir Starmer moves a painting to another room

It’s interesting, if somewhat predictable, what passes muster for making it to the status of a wannabe British political scandal in more normal, less actually scandalous, times. The latest example I know of is the “outrage” at Keir Starmer moving a painting to another room in his house.

Feigned outrage? Very likely, although the modern-day Conservatives do seem in absolute thrall to the subject of the painting in question, Margaret Thatcher. The whole thing kind of feels Britishcore to me, although that may be my bias.

In any case, in the end Starmer even seems to have felt the need to take the time to explain himself - “I like landscapes”.

Compare that Very British Infraction to, for example, the recent case across the pond where the Republican nominee for the governorship of North Carolina was revealed to have made some rather disturbing comments on a porn chatsite whilst, in public, banging on about “protecting women” in all the usual US culture war ways.

The era of Britishcore is upon us

Apparently we’re in the aesthetic era of Britishcore. I’ve enjoyed learning about the cultural ‘cores, at least ever since the days of cottagecore. Corecore was of course a highlight in terms of its naming - a “screaming-into-void” aesthetic according to Vice. There’s also been goblincore, fairycore, cluttercore and weirdcore.

In any case, to be Britishcore is to follow the “practice of embracing the naffest bits of British culture”. At least the traditions that someone (perhaps Tiktok?) has decided are lame, dated and naff - which I fear at times risks verging into the classist, but not necessarily so.

Here’s 100 examples, courtesy of the Guardian. Although that article hasn’t gone down overly well with everyone. An article in the New Statesman described it as being not “just unfunny, it was disturbing” and having “the dead sheen of marketing copy”.

Now Hezbollah's walkie-talkies are exploding

First it was their pagers. Now it’s Hezbollah’s walkie-talkies that are exploding.

The latest attack has killed or injured a few hundred more people, Hezbollah members or otherwise. It apparently seems more and more likely that it’s the work of Israel, although no one has formally claimed responsibility yet.

Whoever did it though, it’s likely against international law. Per the UN human rights commissioner:

Simultaneous targeting of thousands of individuals, whether civilians or members of armed groups, without knowledge as to who was in possession of the targeted devices, their location and their surroundings at the time of the attack, violates international human rights law and, to the extent applicable, international humanitarian law

Human Rights Watch feels similarly, based on the indiscriminate nature of using devices whose location and proximity to civilians could not be reliably known.

New phones aren't all that more exciting despite what Google claims

I believe Jason has previously noted on his blog that new phones are boring - although I can’t find the specific post. I guess it’s not just me being older and more tired that made me feel this way.

This may be no bad thing overall in that the excitement upon new phone releases many years ago likely led to a ton of unnecessary e-waste. Per the World Economic Forum, keeping your phone for longer is better even than recycling its components.

While recycling smartphones is required when phones truly reach end of life stage, keeping phones in use for longer (and therefore minimizing the number which actually need to be recycled) keeps materials in use for longer, reduces waste streams, and means less energy is required for recycling processes.

“The greenest smartphone is the one you already own” says a CNN article that lists a few options you have rather than binning your actually still fine phone.

In any case, I’m more than happy to keep my phone until it simply doesn’t work any more, typically several years. Even my inner tech geek doesn’t feel like I’m missing out.

The companies that produce these phones seem to have picked up on these vibes and are naturally desperate to make us think their phones are new and special.

An advert for the new Google Pixel 9 phone feels like it’s been continuously showing in my household. Much as I’m loath to spread the propaganda, here it is:

The magic is back

Remember when all phones did pretty much the same stuff until they didn’t?

So this is a new phone that can do magical new things we’ve never seen before. Except the two examples that they attempt to dazzle us with are in fact not new things at all. My several-years-old basic model phone has done them for quite some time.

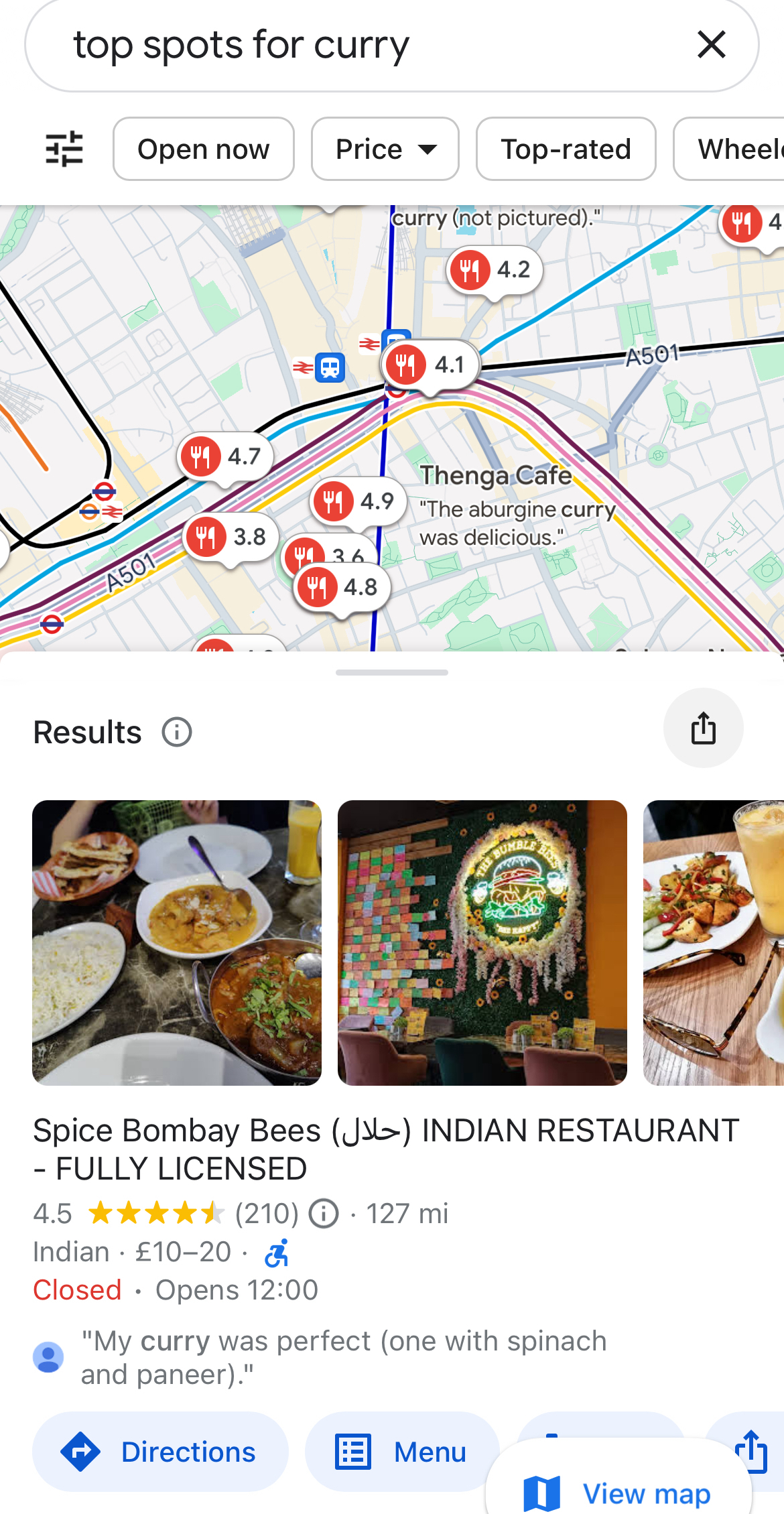

Sticking pins on a map of your locality corresponding to well reviewed restaurants has been a basic function of mapping apps for years. Here is me asking the same kind of question of Google Maps - done with my voice even if you can’t tell that.

And it works fine - or at least as fine as I imagine it works on the fancy new phone.

Granted, maybe the Pixel 9 does it in a different way behind the scenes. I have no idea. But I also don’t care about that unless the results are dramatically better. Of course if they, like many other companies, decided to shove generative AI into everything no matter how unnecessary then I’d imagine the results might not be better at all. Witness Apple’s awkward disclaimer on the generative AI output on its new phone - “Check important info for mistakes”. That feels like work.

The second task Google demonstrate - having AI “watch” a video and answer a question about it - is more of a new innovation, in that it has only been available in the mainstream for the past few years, really since the advent of generative AI as far as I know. But it’s wrong to imply that only the Pixel 9 can do it when in fact the majority of modern smartphones can.

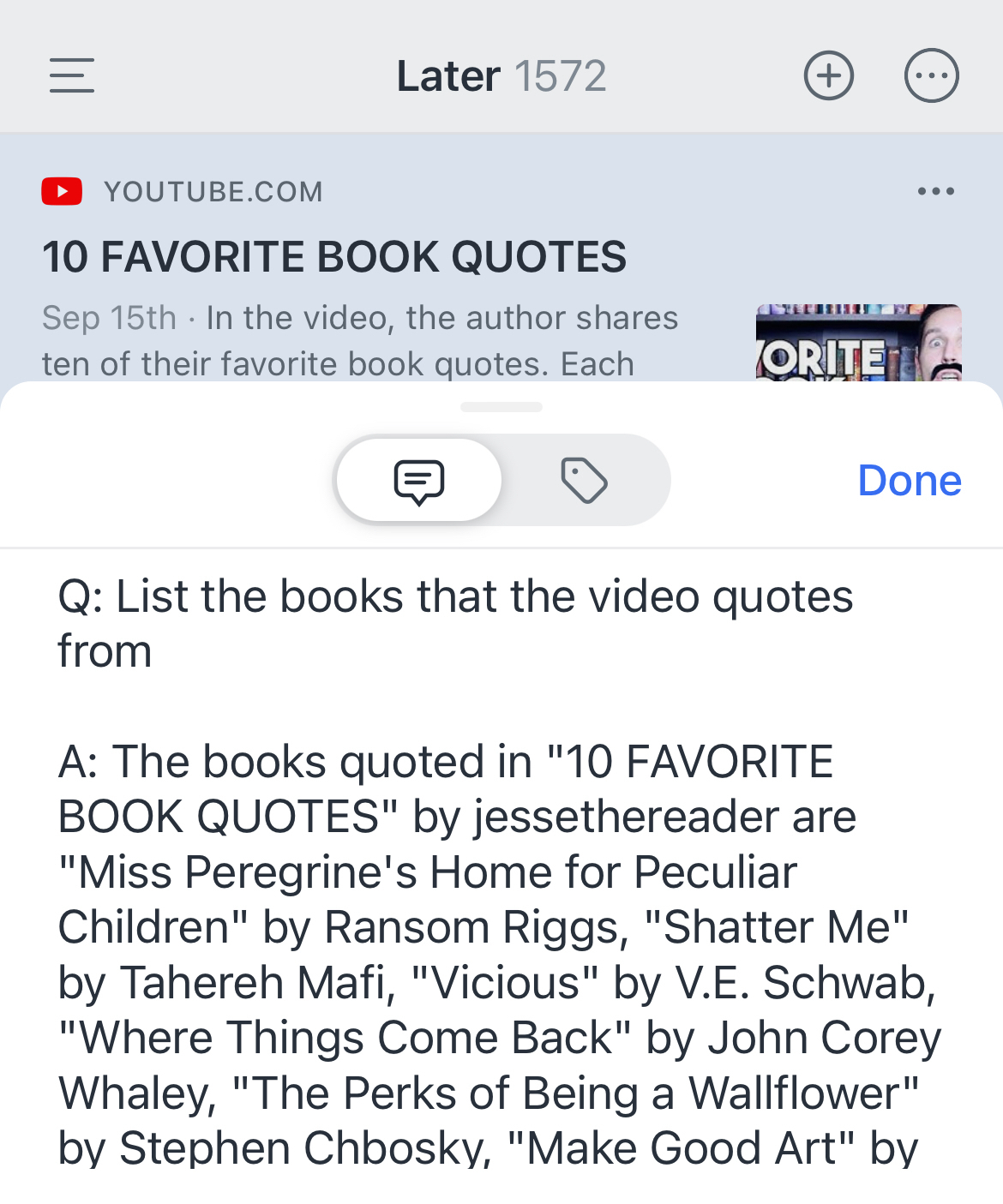

Because at the end of the day this is a software thing, and likely a cloud thing at that. I imagine there are several services out there that have enabled the same sort of task for a while now. Personally I’m a user of the Readwise Reader app which, via its “Ghostreader” function, has a similar ability to let you “ask questions” of Youtube videos. It’s not a feature I ever use - typically if I have loaded up a video it’s because I would actually like to watch a video, although I understand there are valid use-cases where you might want to do otherwise - but it’s there.

Here’s the results of me asking for references from a random Youtube video that contains some.

10015 is a useful website containing a ton of quick little web tools that handle a set of fairly mundane computing tasks that crop up surprisingly often, mostly around text and images.

Some examples include mini tools that:

- change the case of text

- resize or crop images

- format code

- encode URLs

- get a colour code from an image

- pixelate part of a photo

and much more.

Saves you downloading a ton of single-use apps or attempting to penetrate multiple websites full of interstitial adverts to get your job done.

Remote cyber-attack on Hezbollah's pagers causes thousands of injuries and deaths

In what sounds like the plot of a fairly implausible science fiction story, hundreds of pagers belonging to members of Hezbollah exploded yesterday in what appears to be a very well coordinated, very sophisticated attack against the organisation.

Unfortunately, whatever one might think of Hezbollah, some definitely innocent victims got caught up in the assault. At least two of the 12 people known to have died as a result were young children. Around 3,000 people were injured.

It’s still very early days, but the leading theory of how this could have been carried out looks to be that the pagers' hardware supply chain was interfered with several months ago.

The idea is that small explosive devices may have been inserted next to the pager batteries which were then, months later, remotely detonated via a radio signal.

After all, as a bomb disposal expert noted, a pager already has 3 of the 5 components necessary for an effective explosive device:

- container

- battery

- triggering mechanism

- detonator

- explosive charge

Another idea out there is that a hacker, or some previously-infiltrated malware, might have been able to cause the pager batteries to simultaneously overheat, resulting in the devices exploding.

But whilst overheating consumer devices igniting in dangerous circumstances are a real thing, it seems like experts think that the nature of the explosions likely pointed towards an actual explosive charge being involved.

🎶 Listening to HIT ME HARD AND SOFT by Billie Eilish.

The world’s most famous whisperer is back with a third album.

It was a bold move to release it fairly soon after Taylor Swift was in the process of breaking every record going with her 2024 album. But it doesn’t seem to have done Billie too much harm beyond costing her the top spot on the albums chart. 193 million streams in the first week is pretty good going.

🎶 Listening to Radical Optimism by Dua Lipa.

Described in part as being influenced by the neo-psychedelia genre - one I admit I had not heard of before - the album was apparently written to reflect the concept of radical optimism that she’s working into her life.

I want to capture the essence of youth and freedom and having fun and just letting things happen, whether it’s good or bad. You can’t change it. You just have to roll with the punches of whatever’s happening in your life.

So it’s a pretty upbeat set of songs, even when dealing with the various obstacles life brings.

🎶 Listening to ~mAntras~ by Alien Ant Farm.

These are the guys who made the almost indisputably best version of Michael Jackson’s “Smooth Criminal” - less smooth than the original, sure, but criminally good - a rather upsetting 23 years ago.

And here they are decades later, fitting into the category of presumably aging rockers (nu-metalers?) that still sound at least mostly like they did when I was a kid, in my mind at least. Impressive. As always in these circumstances, hard to tell whether nostalgia plays some part in my enjoyment of their latest album, but either way, I find it to be very good.

Another one that makes me wish Guitar Hero was still a thing.

Save videos, photos and audio from your favourite sites with Cobalt

Cobalt.tools looks to be a very useful web tool that lets you download and save video, audio and pictures from several streaming sites (Youtube, Vimeo et al.) as well as those attached to social media posts (Twitter, Tiktok and many more).

cobalt lets you save anything from your favorite websites: video, audio, photos or gifs — cobalt can do it all!

no ads, trackers, or paywalls, no nonsense. just a convenient web app that works everywhere.

Clearly this could be misused. But if, in this world of constantly vanishing content, there is something out there that’s meaningful to you then this would be one method to get yourself an archive copy without exposing yourself to tools ridden with adverts, trackers, malware or whatever else this type of tool often seems to come with.

You can also do a few more advanced things with it should you need to, including:

- extracting audio from a video - that’s something I’ve had cause to do with Youtube in the past.

- specifying the codec or video quality you want.

- remuxing an existing file to increase its compatibility.

It’s free, open source and self-hostable. Very good stuff. You can donate to the project here.

Recent research provides some (weak) evidence that labelling menu items with calories might cause harm to some

Something I feel myself gradually changing my mind on is the ubiquitous labelling of how many calories each food contains on restaurant menus.

The basic idea there is that if consumers see that some restaurant food options have a very large amount of calories in them then they might be less likely to pick them and, as such, consume fewer calories. This would add up so in the long term they’d be less likely to put on excess weight with all the concomitant difficulties and associated conditions which that can entail for some.

There might also be some side-effect benefits too, such as restaurants or food producers providing less calorific meals because they don’t want to have a menu full of dishes that exceed the average person’s recommended calorie intake in one go.

I never felt there was much likelihood that the labelling initiative would actually have a big effect on behaviour, let alone health. It always seemed a bit “this seems like common sense so let’s assume it works” rather than “we have evidence it works so let’s do it”. Intuition over evidence; something that is unfortunately rife and occasionally dangerous in the world of weight loss amongst others.

Another potential defect came from its seeming centring of the issue of overweight and obesity on the incorrect, stigmatising and counterproductive idea that the large increase in the proportion of people living at higher weights reveals something about the people concerned being ignorant or making “bad” choices; as though in recent years some evil wizard cast a magical spell over us all that made most of us into foolish greedy gluttons.

This way of thinking is harmful nonsense. It also puts all the “blame”, for want of a better word, on us as individuals and lets us ignore the structural factors - the things that are harder to think about, more complicated to act on and often less favourable to the profits of associated businesses - that are so clearly at play.

Since then most of the research I’ve seen about the various labelling initiatives seems to have confirmed that indeed we’re not going to reduce the rate of obesity meaningfully by making it clear how many calories a Big Mac has at the point of sale. It may even be an entirely ineffectual intervention in terms of clinical significance in the long term.

But, my scepticism on its likely efficacy aside, I saw no significant harm in trying it out as a policy - plus have a personal bias towards always wanting more information, more data, to be made available. It felt like it may even have shifted my own eating behaviour a tiny amount.

But I’m privileged enough that I’m not someone the policy was ever likely to harm. However, critically, those people may well exist. And if so, well, calorie labelling is now mandatory in the UK, a policy that most of the population seems to have been in favour of, so it’s a potential concern we should look to mitigate.

The evidence on the harms side is scant so far to be fair, so caveat emptor, but there are hints of adverse effects showing up in some recent research.

A recent paper by Brealey et al reviewed some of the literature on the impact of food labelling on people that have eating disorders (ED).

They found four studies - listed and summarised as part of this table - that use qualitative methods to end up suggesting some potential for harm.

The researchers summarise the results as:

Recurrent themes describe how calories on menus: can lead to a hyper-fixation on calories, restrict food freedom (meals are chosen for their calorific value rather than what was actually wanted or appropriate for hunger levels), reduce eating out opportunities, increase feelings of anxiety, guilt and shame around food choices, and inhibit ED recovery.

Some participants in these studies expressed anger over the messaging from ‘trusted’ public health authorities on the normalisation of calorie counting, because calorie counting had played a pivotal role in the development of their ED

To be clear, the feelings on labelling weren’t all negative for the participants concerned. Some saw benefits. Many of them also felt that calorie labelling may overall be a net good thing even if it might put them themselves at risk.

The researchers found only a single study that looked at more objective changes to the symptoms of eating disorders themselves, carried out in a university café. That work found no significant effects of labelling on some symptoms associated with eating disorders, but also came with a large number of limitations - not least that the participants hadn’t been formally diagnosed as having an ED.

So honestly the evidence base for harms also seems pretty weak at present. There’s simply not enough research out there to form much of a strong conclusion. And almost none that looks at objective rather than subjective harm in terms of ED symptoms - not to suggest that any types of harm should be disregarded.

But on the other hand, given the dearth of evidence that labelling actually leads to much of a relevant change in people’s behaviour, let alone any positive change in their health, the risk equation shifts some way in the anti-labelling direction when any evidence, even if it’s weak, for the potential for harm comes to light.

Without wanting to be a cliché, this is one area where “more research is needed”.

UniGetUI is a handy GUI for Winget and other Windows package managers

If you’re a Windows user who likes using Winget as a package manager, as I do, but would prefer a graphical user interface over hitting the command line then UniGetUI (formerly known as WingetUI) might be the app for you.

It’s a free open-source utility that lets you install, update and uninstall apps via Winget with mere clicks of a mouse.

That wouldn’t interest me all that greatly - I rather like typing commands - except that it comes with a few extra functions that I don’t think are available in default Winget. Some I like are:

- It can automatically scan for updates to your existing apps on a schedule. If it finds one it’ll notify you so you can choose to update it there and then or not (you can exclude certain apps if you never want to update them).

- It can install, update or uninstall multiple selected packages at once - not just “one or all”.

- It can export a list of some or all of your installed applications (plus any custom installation parameters you installed them with). Then in the future you can import that same list again to a different or newly reformatted computer and it’ll install everything in it in one fell swoop using the same configuration.

If you’re into a different brand of Windows package manager - Chocolatey, Scoop, Pip and more - then you’re in luck: the app renamed itself from WingetUI to UniGetUI because it now supports those package managers too. So you can recursively manage multiple package managers from one place now if that tickles your fancy.

Where is the boundary between blue and green?

ismy.blue is a weirdly fascinating website that shows you a page with a background colour somewhere on the blue-green spectrum - anything between an HSL hue value of 120 and 240.

You then hit a button depending on whether you’d call it blue or green if you were forced to say. The screen refreshes to another place on the same spectrum and you keep making the same blue vs green decision until it narrows down to something approximating what must be the boundary between what you consider to be blue and what you would say is green.

Apparently for me it’s somewhere around hue 175.

This means that for me to call something blue it has to be “more blue” than it would be for 59% of the folk who took the test. So if forced to say whether turquoise was blue or green I’d apparently be more likely than most to pick green.

My monitor isn’t the best and, as the site notes, that sort of thing is one of many factors that are bound to affect the results - so it’s very much “for entertainment purposes only”.
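I don’t know how the site actually picks its next colour, but the narrowing-down idea can be sketched as a simple bisection over the hue range. Everything here is my own assumption for illustration: the function names, the number of rounds, and the simulated viewer whose boundary sits at hue 175.

```python
from typing import Callable


def find_boundary(says_blue: Callable[[float], bool],
                  lo: float = 120.0, hi: float = 240.0,
                  rounds: int = 8) -> float:
    """Bisect the green (120) to blue (240) hue range.

    `says_blue(hue)` stands in for the person clicking a button:
    it returns True if they would call that hue blue.
    """
    for _ in range(rounds):
        mid = (lo + hi) / 2
        if says_blue(mid):
            hi = mid  # boundary is at or below this hue
        else:
            lo = mid  # boundary is above this hue
    return (lo + hi) / 2


# Simulated viewer (hypothetical): anything at hue 175 or above is "blue".
def viewer(hue: float) -> bool:
    return hue >= 175


boundary = find_boundary(viewer)
print(f"Estimated blue/green boundary: hue {boundary:.1f}")
```

Real people are inconsistent near their boundary, so a real implementation would presumably need something more forgiving than strict bisection.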

On a similar subject, here’s a handy site that converts from hue values to CSS-style hex colour values.
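If you’d rather do that conversion yourself, here’s a minimal Python sketch using the standard library’s `colorsys` module. The full saturation and 50% lightness defaults are my assumptions, not necessarily what either site uses.

```python
import colorsys


def hue_to_hex(hue_degrees: float, saturation: float = 1.0,
               lightness: float = 0.5) -> str:
    """Convert an HSL colour to a CSS-style hex string."""
    # colorsys takes its arguments in HLS order, each scaled to 0..1.
    r, g, b = colorsys.hls_to_rgb((hue_degrees % 360) / 360,
                                  lightness, saturation)
    return "#{:02x}{:02x}{:02x}".format(
        round(r * 255), round(g * 255), round(b * 255))


# The two ends of the test's range, plus my own apparent boundary.
for hue in (120, 175, 240):
    print(hue, hue_to_hex(hue))
```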

Fake streamers listening to fake music by fake artists on Spotify allegedly made a single fraudster at least $10 million

In a perfect example of the potential made available by the deleterious effects of the bad business models of music streaming services, someone has been charged with defrauding Spotify to the tune of $10 million.

The allegation is that Michael Smith created a ton of low-effort “music” - of course eventually turning to the tools of artificial intelligence after he realised he couldn’t make nearly enough money from his own more natural efforts. He then invented a big pile of bands that don’t exist to use as the supposed artist accounts that this music could be uploaded to Spotify under.

He then created a huge number of fake accounts for imaginary people - maybe as many as 10,000 - in order to “listen” to that music, which he did via streaming from a variety of computers that appeared to be in different places.

Because it was so many different songs from so many different artists listened to by so many different people - all fake - he wasn’t detected for a while.

It seems it was very much financially worthwhile for him, at least for a while.

From the NYT’s report on this:

According to a financial breakdown that he emailed himself in 2017 — the year that prosecutors say he began the scheme — Mr. Smith calculated that he could stream his songs 661,440 times each day. At that rate, he estimated, he could bring in daily royalty payments of $3,307.20 and as much as $1.2 million in a year.
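Those figures hang together. A quick sanity check, using only the numbers in the quote plus the assumption of a 365-day year, implies a payout of half a cent per stream:

```python
streams_per_day = 661_440
daily_royalties = 3_307.20  # dollars, per the quoted breakdown

# Payout per stream implied by the two quoted figures.
per_stream = daily_royalties / streams_per_day

# Annual total, assuming the same rate every day of a 365-day year.
annual = daily_royalties * 365

print(f"Implied payout per stream: ${per_stream:.4f}")
print(f"Implied annual royalties: ${annual:,.2f}")
```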

Of course, because of how Spotify pay-outs work - in simple terms they take the revenue they make from user subscriptions and advertising and divide it up between the artists based on what % of streams the artist receives - presumably this was to the direct financial detriment of other, real, artists (and all the other organisations that also take a cut before it reaches the artist).
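To make that concrete, here’s a toy sketch of a pro-rata payout pool. The pool size, artist names and stream counts are all made up for illustration, and real payout rules are certainly more complicated than this.

```python
def pro_rata_payouts(pool: float, streams: dict[str, int]) -> dict[str, float]:
    """Split a fixed revenue pool in proportion to each artist's streams."""
    total = sum(streams.values())
    return {artist: pool * count / total for artist, count in streams.items()}


pool = 1_000.0  # hypothetical revenue to share out, in dollars

honest = {"Real Artist A": 600, "Real Artist B": 400}
with_fraud = {**honest, "Fake Band": 1_000}  # bot streams join the same pool

before = pro_rata_payouts(pool, honest)
after = pro_rata_payouts(pool, with_fraud)

for artist in honest:
    print(f"{artist}: ${before[artist]:.2f} -> ${after[artist]:.2f}")
```

The pool doesn’t grow when the fake streams arrive, so in this simplified model every dollar the fake band collects comes straight out of the real artists’ share.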

A friend gets in touch to let me know that it’s not necessarily scary AI cyber-brains that are going to end humanity. We also need to be wary of robotically-augmented mushrooms.

I’m not sure what sci-fi novel this particular bunch of researchers last read, but something has compelled them to invent a robot which is controlled by the whims of living fungi.

The biohybrid robot uses electrical signals from an edible type of mushroom called a king trumpet in order to move around and sense its environment.

Developed by an interdisciplinary team from Cornell University in the US and Florence University in Italy, the machine could herald a new era of living robotics.

I feel like I could probably outrun it in its present incarnation though:

The Independent article we learned about this in also revealed the existence of a project called OpenWorm which is looking to upload a digital replica of a worm’s brain into some Lego.

📄 Reading the Pivot to AI blog.

This is high-grade fodder for those of us who harbour a significant amount of scepticism for the recent boom in AI, especially of the generative type.

Don’t get me wrong, when this stuff works it can seem like magic, and I do use it in small doses many days. But it really doesn’t need to be shoved into every vaguely-digital product, forced down every throat, and used as a threat to the job security of any folk who might well actually be doing their job way better than a robot brain can - even when the AI often exists only because the companies involved took-without-asking the work of the human workers concerned in the first place. Of course improving quality of output isn’t the real reason people are being “replaced”.

The blog reminds me a bit of Molly White’s justly iconic Web 3 Is Going Just Great blog. But instead of incessant reports on the pointlessness, scamminess and criminality that appears to be infused into the soul of blockchain-world, here we see frequent examples of where we’re burning natural resources and consuming vast amounts of money and compute on AI rather than crypto in the name of getting something rather worse than what we had before.

Sample quote from the most recent post:

The main use case for LLMs is writing text nobody wanted to read. The other use case is summarizing text nobody wanted to read.

More events in what is starting to feel something like a global backlash against some of the big social media companies.

We’ve previously seen the arrest of Telegram’s founder, Pavel Durov. Now, elsewhere, Brazil has totally banned the use of Twitter (OK, “X”) for anyone who lives there, on the basis that the company is refusing to appoint a legal representative in Brazil. This is something all foreign companies that operate in Brazil are required to do.

Once again, I know far too little about the situation to judge the rights and wrongs of the ban. Or for that matter its enforceability. Although, at least in theory, X is to be cut off at the ISP level, and hefty fines await any Brazilian users who use VPNs to evade the ban.

It might be noted that this follows Elon Musk, owner of X, becoming upset that one of Brazil’s judges asked him to suspend the accounts of users the judge claimed were spreading disinformation. Elon is of course not known for maintaining his cool when someone does anything other than agree wholeheartedly with him. X is now claiming that the Brazilian judge was making “illegal orders to censor his political opponents”.

Whatever the ins and outs of the situation, it seems a good amount of Brazil’s ex-X users aren’t all that disheartened by the news, or at least not so disheartened that they can’t muster the energy to post through it. They just went somewhere else.

These events have apparently caused a huge wave of signups to X-rival Bluesky - setting an all-time-high record for activity on that platform, plus a substantially lower but above zero number to Mastodon.

From the recent reporting by “Last Week in Fediverse”, it sounds like the Brazilian users have also made Bluesky a lot more of a fun place to be.

Research suggests that GLP-1 pharmaceuticals can prevent Covid-19 deaths

Some new research is out about that category of drugs which at times seems like it could singlehandedly solve half the world’s ills, at least for rich folks who can afford them - GLP-1s.

The new paper by Scirica et al. concerns a randomised controlled trial where researchers provided Semaglutide (aka Wegovy) to patients with overweight or obesity with a view to learning whether it helped prevent cardiovascular (“CV”) problems, including CV-related death.

The drug didn’t seem to do anything to alter the rate at which participants caught Covid-19. But, for those that did catch Covid, it helped prevent them from suffering from serious adverse events - including death - from it when they did.

Semaglutide did not reduce incident COVID-19; however, among participants who developed COVID-19, fewer participants treated with semaglutide had COVID-19–related serious adverse events (232 vs 277; P = 0.04) or died of COVID-19 (43 vs 65; HR: 0.66; 95% CI: 0.44-0.96).

The study reports that it isn’t only deaths from Covid that decreased - they saw lower rates in terms of all-cause deaths - a reduction of 19% - as well as both cardiovascular and non-cardiovascular related deaths when broken down in that way.

Participants assigned to semaglutide vs placebo had lower rates of all-cause death (HR: 0.81; 95% CI: 0.71-0.93), CV death (HR: 0.85; 95% CI: 0.71-1.01), and non-CV death (HR: 0.77; 95% CI: 0.62-0.95).
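For anyone wondering where the 19% comes from, here’s a rough sketch that turns each quoted hazard ratio into an approximate relative reduction and checks whether its 95% confidence interval excludes 1. Reading a hazard ratio as a simple “percent fewer deaths” is a simplification on my part, and the function name is my own.

```python
# (hazard ratio, CI lower bound, CI upper bound), as quoted above.
results = {
    "all-cause death": (0.81, 0.71, 0.93),
    "CV death": (0.85, 0.71, 1.01),
    "non-CV death": (0.77, 0.62, 0.95),
}


def summarise(hr: float, ci_low: float, ci_high: float) -> tuple[int, bool]:
    """Return (approximate % reduction, whether the CI excludes 1)."""
    reduction_pct = round((1 - hr) * 100)
    excludes_one = ci_high < 1 or ci_low > 1
    return reduction_pct, excludes_one


for outcome, (hr, low, high) in results.items():
    pct, clear = summarise(hr, low, high)
    note = "CI excludes 1" if clear else "CI includes 1"
    print(f"{outcome}: ~{pct}% lower rate ({note})")
```

On that reading, the CV death interval (0.71 to 1.01) just crosses 1, so that particular difference looks less clear-cut than the other two.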

Of course no study is perfect, so it’s worth reading the full thing to understand some of the limitations. But it is nonetheless a result that was described as “stunning” by Dr Faust, who wrote an editorial in the same issue of the journal.

Having obesity is a risk factor for suffering greatly or dying from Covid, so this is a group of participants who were more vulnerable to the disease in the first place. It’s not a small group though: over two-thirds of American adults live with overweight or obesity.

As far as I can tell the jury is out as to whether it was solely the weight loss associated with this drug that provided protection from Covid’s worst effects - the participants who used this drug did lose 5kg more weight than the others on average 1 year in - or whether there is some other mechanism at play. Doctors Maron and Faust, as quoted in the NYT, think there’s potentially something else going on as well, perhaps around the drugs leading to a reduction in chronic inflammation.

I continue to believe that the irrational efforts some people seem to think we should go to to prevent people who could benefit from these drugs actually getting them is nothing short of deadly in some cases. Treating obesity can save lives.

Another great free e-books offer from Verso, a publisher that continues to release at least one book I’d like to read every month. Three temporarily £0 books on the subject of political dissent, released in solidarity with the recent campus protests regarding the Israel-Hamas conflict.

Telegram is only end-to-end encrypted if you solely use 'secret chats'

I haven’t looked into the arrest of message-app Telegram’s founder Pavel Durov enough to form an opinion, but one thing I did learn from a recent Guardian newsletter on the subject was that Telegram is a lot less private and secure than I’d imagined.

I’d imagined it as an alternative to fully encrypted messaging services like Signal that I just hadn’t had any reason to use much so far. But despite its home page promoting it as being privacy oriented - “Telegram messages are heavily encrypted and can self-destruct” - in reality the service is not end-to-end encrypted for the most part.

Whilst all messages are “basic-encrypted” such that your internet provider or anyone intercepting communications between your phone and your Wifi router couldn’t read them, only DMs sent via the “secret chats” feature - an opt-in feature that you have to discover and enable yourself - are end-to-end encrypted. Everything outside of that, including all messages in group chats and broadcast channels, is not end-to-end encrypted.

A lack of end-to-end encryption means that in theory anyone with the correct access - legitimately acquired or not - to Telegram’s systems could read your messages. In addition, Telegram would be able to hand your correspondence over to any authority that requests it.

It’s an ongoing debate as to whether this is a net good or net bad thing of course. But if you came to Telegram for its privacy, well, you have to go to some effort and also stop yourself using most of its features if you want to be absolutely certain that no-one other than your intended recipient can read your message.

All in all, if you want truly private messaging that not even the service’s CEO - or a hacker using their account - could see, then from an encryption point of view it turns out you’re likely better off using Signal or even, believe it or not, Meta’s WhatsApp (! A cynic might say that this is a sign that it wasn’t Meta that invented WhatsApp, they just bought it). Both Signal and WhatsApp are end-to-end encrypted by default.

That’s not to say even those two services know nothing about you. It just means they don’t know the content of your messages, which is often people’s first concern - even though metadata can on occasion give away rather more information about you than you might expect.

Signal makes a big deal about how little it stores even on the metadata front. They basically know when your account was created and when it was last used, so that’s all they can give to the authorities when legally compelled to do so. WhatsApp on the other hand may apparently know when and where you were when you used the app, the names of your group chats, and has the ability to record which phone numbers your number chats to if legally required to do so.

iMessage or Google’s message apps are also apparently contenders for end-to-end encryption fans but only if you’re absolutely sure that everyone you talk to is using the same type of phone and same chat app. Any convo that involves an iMessage green bubbler for instance isn’t end-to-end encrypted. You also apparently give Apple the keys they need to decrypt your iMessages if you back them up to iCloud using the default settings.

🎥Watched Legally Blonde 2: Red, White & Blonde.

Well I just watched the first. I couldn’t remember if I’d ever seen the second. Now I have.

Now a successful lawyer, Elle finds out that her cute little dog’s mother is being used by a cosmetics company for animal testing. By a corporation her own company represents no less.

Time to quit being a lawyer and head off to Washington for a new adventure amongst a new type of similarly prejudiced Very Serious People in the hope of convincing a sympathetic Congresswoman to get an anti-animal testing bill through the system.

🎥 Watched Legally Blonde.

This was the year I rewatched the very famous, very popular, feminist comedy film about a pink-obsessed very feminine blonde young lady who takes herself off on a life-changing journey in a somewhat misguided attempt to preserve things as they are. Eventually she realises she’s worth so much more than a trophy wife to an idiot man living a 1950s lifestyle - she’s more than just a pretty face - and, hey, we all learn some sanitised but important bits and pieces about the necessity of feminism along the way.

Not Barbie, that was earlier this year. Instead, 2001’s Legally Blonde. A classic from my formative years. One of the rare films I deigned to watch more than once per lifetime, and might even spring for tickets to the musical in the future if the opportunity should arise.

Our hero is Elle Woods, a young lady who’s into beauty, hair, nails, hanging out with her girlfriends, the colour pink, small dogs and anything else that you could popularly code as “feminine” back in the year 2001.

Her privileged and wealthy boyfriend dumps her. He still likes her enough, but really she’s just a fun time, a bimbo, a silly little girl. Certainly not someone who he can imagine as the serious person that the serious politician he has an ambition to be should be married to. And you know, male careers come first.

She does not enjoy this decision. Rather than smash up his expensive car though, she decides to follow him to the prestigious only-brainiacs-need-apply Harvard Law School in order to study law and prove she’s a worthwhile and serious person really.

This might be more than a little wince-inducing as the defining motivation for the character - but do remember we’re talking about early 2000s Hollywood-sanitised pop-feminist takes here. Barbie wasn’t perfect and that was more than twenty years later (it was great though). Besides, it’s an important part of the setup. But yep, the film is necessarily something of a product of its times. Don’t expect intersectionality et al. - it’s all pretty young White Heterosexual Lady trying to win her man back stuff. At least at first glance. The feminist focus here is instead more explicitly on the late 90s style priorities of smashing various glass ceilings, girl-bossing it, becoming respected in male-dominated places of learning and work. Of changing the world by yourself, not having to be content with hanging off the arm of someone else who does.

Which, spoiler alert, of course she does. But perhaps the most empowering point it makes is that she can do so without changing herself, without donning a mask - refusing to conform to what the 1990s (and yes, 2024 for plenty) saw as the image of a fancy lawyer who happens to be female.

Here we see that yes, it is possible to be a young lady who enthusiastically likes “feminine” things and has “feminine” interests and still make waves in spheres of life beyond being pretty and dating eligible bachelors - not that there’s anything wrong or lesser about being interested in those things as well I’m sure Elle would be the first to tell you.

From the New Statesman review:

If its message – that a woman can be smart and enjoy lip gloss simultaneously – does not feel especially radical now, it is worth noting that a generation of millennial women first enjoyed it at the age of ten or 12, when such an idea could be experienced as a revelation.

Thanks to Wikipedia I also learned that the film is based on a novel of the same name by Amanda Brown based on her own experiences of going to Stanford Law School with the same kind of innate preferences for fashion, beauty and the like as our fictional protagonist here.

There’s also a new prequel spinoff TV series called Elle supposedly coming soon, showing her high school lifestyle. But given the second filmic sequel, Legally Blonde 3, was supposed to be released in 2020 and there’s no sign of it yet it’s probably best not to hold one’s breath.

Windows has a keyboard shortcut for opening LinkedIn for some reason

New contender for the least useful Windows keyboard shortcut in existence: Windows key + Ctrl + Alt + Shift + L.

It’s a shortcut to everyone’s favourite thing to do on a computer: visit LinkedIn.

“But why?” you might justifiably ask.

It turns out it’s a mix of two things. Firstly that Microsoft previously tried to make a special new key on keyboards - the Office Key - happen. As far as I know it ended up happening substantially less than even “fetch” did. I’ve never seen one IRL anyway.

As a sidenote: the smiley face key to the right in the above photo from Microsoft was another new key that as far as I know failed to take off - the emoji key. That one I could see a use for in 2024 business settings, particularly if you’re an enthusiastic marketeer. If you don’t have that key on whatever you’re currently sitting in front of, then Windows key + . (i.e. the full-stop key) does the same thing: it opens the Windows emoji picker.

Anyway, back to the Office Key. Any keyboard shortcuts that would have used the Office key can now be used by the rest of us via Windows + Ctrl + Alt + Shift + whatever. And LinkedIn was one, it being famously the case that the average office worker trawls that site once per hour desperately looking for a new job to escape from their current deskbound prison to.

And secondly that Microsoft bought LinkedIn back in 2016 for $26 billion with the aim to “integrate it with Microsoft’s enterprise software, such as Office 365”. Well, they did successfully integrate it with a key that almost no-one has access to I guess.

The first point means that actually there’s arguably even less useful keyboard shortcuts available if I’m honest. Office Key / Windows + Ctrl + Alt + Shift + Y for instance opens “yammer.com” which seems to be a kind of Facebook for companies I’ve never heard of called “Viva Engage”. I must admit to visiting LinkedIn more often than Yammer (total for the latter before today: zero times), although I’m not sure it’s been any more productive.

I could see Windows + Ctrl + Alt + Shift + X for “Load Excel” being a bit more useful for me, although by the time I manage to get my hand into the correct shape to reliably hit that combo I probably could have opened it 5 other ways.
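For anyone who wants the Office Key substitution spelled out, here is a minimal sketch in Python. It only covers the three shortcuts mentioned in this post; the names `OFFICE_KEY_EQUIVALENT`, `OFFICE_SHORTCUTS` and `describe_shortcut` are made up for illustration and it doesn't touch Windows itself, it just builds the key-combination text.

```python
# Modifier combination that, per this post, stands in for the Office Key.
OFFICE_KEY_EQUIVALENT = ["Windows", "Ctrl", "Alt", "Shift"]

# Only the three shortcuts mentioned in this post; not a complete list.
OFFICE_SHORTCUTS = {
    "L": "LinkedIn",
    "Y": "yammer.com (Viva Engage)",
    "X": "Excel",
}


def describe_shortcut(letter: str) -> str:
    """Return a readable description of the Office Key shortcut for a letter."""
    key = letter.upper()
    if key not in OFFICE_SHORTCUTS:
        raise KeyError(f"No shortcut recorded for {key!r}")
    # Join the four modifiers and the final letter into one combo string.
    combo = " + ".join(OFFICE_KEY_EQUIVALENT + [key])
    return f"{combo} opens {OFFICE_SHORTCUTS[key]}"


if __name__ == "__main__":
    for letter in OFFICE_SHORTCUTS:
        print(describe_shortcut(letter))
```

Calling `describe_shortcut("l")` gives back the LinkedIn combo as a single string, which is about as much effort as actually pressing it.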

The US Food and Drug Administration approved new vaccines for Covid-19 last week. The recommendation from the CDC is that basically everyone goes avail themselves of them, aside from anyone with any specific health conditions that preclude it one assumes.

These vaccines are a lot more effective than the previous set against the latest strains of Covid that are circulating around. These newer variants are causing something of a new wave of Covid cases, albeit one substantially less harmful to most people than what we saw in the early days of the pandemic. But it’s not less harmful to everyone; people are still dying of it, and that’s before we get onto the horror-show of life-altering risks that Long Covid can entail.

Personally I wish I’d had one of the vaccines a few weeks ago, having gotten quite ill recently - but without travelling through time and space it wasn’t really an option.

Ibrahim Al-Nasser has officially set a new world record for how many video games consoles anyone has ever managed to connect to a TV at the same time. A critical stage in human development of course.

He’s bought enough cables and adaptors to plug in an astonishing 444 “completely different” gaming machines - everything from 1972’s Magnavox Odyssey to the latest 2023 incarnation of the Playstation 5.

Apparently the Sega Genesis (aka Mega Drive to my fellow country-folk) is his favourite one.