The left is missing out on AI: ‘…ceding debate about a threat and opportunity to the right.’

Recently I read:

In 1984, An Unemployed Ice Cream Truck Driver Memorized A Game Show’s Secret Winning Formula. He Then Went On The Show: …and became rich, for a while.

Where are all the ‘Don’t tread on me’ Americans?: ‘If you oppose tyranny, the people of Minneapolis are your brothers and sisters in arms, regardless of what you think about immigration.’

The NYT and WaPo knew the US was going to abduct President Maduro in advance

It was interesting to read that (at least) the New York Times and the Washington Post learned about the covert US military mission to abduct the President of Venezuela some time before it actually happened, not just after Trump tweeted some meandering string full of capital letters about the subject like the rest of us did. They chose not to publish anything on it, though, apparently to avoid putting the US troops involved in more danger than they otherwise would have been.

The decisions in the New York and Washington newsrooms to maintain official secrecy is in keeping with longstanding American journalistic traditions — even at a moment of unprecedented mutual hostility between the American president and a legacy media that continues to dominate national security reporting.

I imagine that was not an entirely trivial decision to make given the current environment in the US and beyond. The mission in question was, after all, for all its potential upsides and downsides, very likely an internationally and nationally illegal act that hadn’t yet taken place, being planned and carried out in the absence of any sign of democratic oversight.

It was also interesting for me to realise that there is no official mechanism for the US government to ask the press to stop reporting on whatever the highly sensitive topic of the day is. It sounds like the system simply relies on the media and the government coming to a mutual agreement.

Over here in the UK it is a bit different. We have, for example, the infamous “D-Notices”, or DSMA-Notices as they have apparently now been rebranded. Our government can issue these to ask the media not to publish stories that it thinks will endanger national security. Wikipedia has a short list of a few times we know that these have been issued.

D-Notices aren’t actually legally enforceable, although they are typically adhered to. Beyond that, though, we have seen the UK government take out injunctions, or even “super-injunctions”, which do legally prevent information being shared. That was how, for a couple of years, the government covered up the catastrophic data leak that revealed the personal details of thousands of Afghans who had secretly helped the UK’s armed forces.

📺 Watched Return To Paradise seasons 1 and 2.

Yet another Death In Paradise spinoff. This time it’s set in Australia, and the weird/genius/awkward/reluctant detective who is always just about to quit her job is a lady, Mackenzie Clarke. She returns to her homeland for a break after being suspended from her job with the police force over here in London. But will she get a break as such? Well, obviously not.

The format and storylines are basically exactly the same as the original and all the other spinoffs - some variant of “cozy murder” - so you might as well watch this one if you liked those. I did.

Yet more of the apparent hypocrisy:

November 28 2025: Trump issues a full pardon to former Honduran president Hernandez, who was in a US jail on charges around drug trafficking and weapons.

January 4 2026: Trump authorises the kidnapping of Venezuelan president Maduro and intends to try him in US courts on charges around drug trafficking and weapons.

Weird date coincidence (I assume):

January 3 1990: US captures Panama’s ruler Noriega on foreign soil in a probably illegal military operation.

January 3 2026: US captures Venezuela’s president Maduro on foreign soil in a probably illegal military operation.

The US bombs Venezuela, kidnapping its president

Trump authorises his military to bomb Venezuela and kidnap its president, Maduro, which they have successfully done.

Trump now thinks he’s going to run Venezuela, which will include seizing its oil industry, presumably so that the mega-rich US oil companies can become even richer.

Apparently gone are the days when the US used proxy wars and secret funding to depose Latin American governments it disliked. Now they show no shame in directly doing it themselves and then tweeting about it.

Gone are the days when their government at least pretended at the time that its foreign military incursions were not actually mostly about seizing their opponents’ natural resources.

Just two weeks ago, Trump mentioned oil as a justification for his military buildup off Venezuela’s coast.

“They took our oil rights, removed our companies, and we want them back,” he told reporters on the Joint Base Andrews tarmac beside Air Force One.

Trump has, for years, expressed his belief that the United States had the right to confiscate oil using the military

Maduro was a bad man, a horrible president. No one needs to venerate him as anything other than that.

Maduro was widely considered to be leading an authoritarian government characterized by electoral fraud, human rights abuses, corruption, and severe economic hardship

But one can’t just invade other countries and abduct people one doesn’t like. The US operation was almost certainly illegal under international law, although so far I haven’t seen many of the relevant organisations look like they’re going to do anything about it. Given that the US can veto any relevant UN decision, there’s little likelihood of much happening there.

It is perhaps less mind-blowingly unprecedented than it seems. At least on my recent reading, the US did something vaguely similar in Panama back in 1989.

The United States invaded Panama in mid-December 1989 during the presidency of George H. W. Bush. The purpose of the invasion was to depose the de facto ruler of Panama, General Manuel Noriega, who was wanted by U.S. authorities for racketeering and drug trafficking. The operation, codenamed Operation Just Cause, concluded in late January 1990 with the surrender of Noriega

Although in that case the US seems to have been considerably more provoked, rather than acting on what looks like the whim of a corrupt, criminal and at times seemingly mad US president.

Following the declaration of a state of war between Panama and the United States passed by the Panamanian general assembly, as well as the lethal shooting of a Colombia-born U.S. Marine officer Lt. Robert Paz at a PDF roadblock, Bush authorized the execution of the Panama invasion plan.

Nonetheless, Bush’s operation was condemned as illegal by much of the global community.

The U.S. government invoked as a legal justification for the invasion. Several scholars and observers have opined that the invasion was illegal under international law, arguing that the government’s justifications were, according to these sources, factually groundless, and moreover, even if they had been true they would have provided inadequate support for the invasion under international law

So there’s little doubt that Trump’s actions were, once again, not in line with the law. The question is, can and will anyone with power do anything about it, or is this the new norm for the country formerly known as a kind of global policeman?

Reform's defence of your right to tweet 'controversial' opinions only extends to their ideological friends

On the one hand, Reform UK heavily promote and feature Lucy Connolly at their annual conference, a lady who was arrested and pleaded guilty to stirring up racial hatred via her offensive tweets.

But they’re only this kind of “free speech advocate” when it suits them. As soon as it’s someone whose views don’t match the vibe of the Reform higher-ups, it’s a totally different story.

Regarding Abd el-Fattah, who has also been found to have posted some very offensive tweets, for which he has since apologised: in that case, Reform want to go beyond merely arresting him, instead desiring to remove his British citizenship and deport him, even though there would seem to be no legal basis for doing so whatsoever:

The Conservatives and Reform UK have both suggested the activist should be deported from the UK for the posts and have his British citizenship revoked, even though the law does not appear to provide grounds for either action. Nigel Farage has promoted a petition for people to sign in favour of deporting Abd el-Fattah to Egypt.

It’s yet another example of Reform and some of their ideological allies hypocritically switching their views on some of the fundamental tenets of British society, law and order, depending on whether they like the person concerned.

Books I read in 2025

Here are the books I finished reading in 2025.

Tech bros seem obsessed with Lord of The Rings. Perhaps they should read it.

Innumerable start-up ventures from the often seemingly morality-free tech bros who control most of our digital lives seem to be named after Lord of The Rings stuff. Business Insider gives us a few examples:

- Erebor - a bank

- Anduril - defense tech

- Palantir - how to describe? BI says “a government-focused software giant”.

- Mithril Capital - an investment firm

- Durin - mining

- Rivendell One LLC - a trust that manages Peter Thiel’s shares.

- Lembas LLC - an investment firm

- Valar Ventures - a venture capital firm

- Sauron Systems - a home security system

But in doing so, some of these pretentious and shallow thinkers often betray their actual ignorance of the book or, if it’s not that, well, it’s a bad sign for other reasons.

The latest one that crossed my radar was Sauron Systems.

They’re trying to build:

…what they envisioned as a military-grade home security system for tech elites.

…a system combining AI-driven intelligence, advanced sensors like LiDAR and thermal imaging, and 24/7 human monitoring by former military and law enforcement personnel.

Like all good tech start-up products, it apparently doesn’t actually exist yet, other than as something investors can throw money at.

It is also named after the famously evil baddie from the Lord of the Rings trilogy and elsewhere in Tolkien’s literary world.

So who or what exactly is Sauron? According to Wikipedia:

Tolkien stated in his Letters that although he did not think “Absolute Evil” could exist as it would be “Zero”, “Sauron represents as near an approach to the wholly evil will as is possible.”

He explained that, like “all tyrants”, Sauron had started out with good intentions but was corrupted by power. Tolkien added that Sauron “went further than human tyrants in pride and the lust for domination”,

Bold, and I suppose potentially honest, of a surveillance company to represent itself as an entity well known for its evil-doing.

Some might say that “started out with good intentions but was corrupted by power” and “went further than human tyrants in pride and the lust for domination” isn’t a particularly terrible description of a few of Silicon Valley’s wannabe digital empires.

The British anti-immigrant hostility threatens our health service

Yet another way in which the often appalling, often racist, anti-immigrant sentiment being successfully whipped up in Britain by various politicians and media is making our country a weaker, worse place to live in for even its ‘native’ citizens.

The health service is being put at risk because overseas health professionals increasingly see the UK as an “unwelcoming, racist” country, in part because of the government’s tough approach to immigration, Jeanette Dickson said.

Record numbers of foreign-born doctors are quitting the NHS and the post-Brexit surge in those coming to work in it has stalled. At the same time, the number of nurses and midwives joining the NHS has fallen sharply over the past year.

Our health system already seems to be in a desperate condition. Without the migrants who come to our country and generously bestow their skills on us for the good of the entire British population, it can only ever move further towards being totally doomed, and we will all suffer tremendously for it.

Foreign-born doctors and nurses were being put off by antagonism by politicians towards migrants, media coverage of immigration, the racist abuse of international medical graduates by NHS colleagues and racist aggression by patients toward minority ethnic NHS staff, she said.

Professor Langdon returns in Dan Brown's gripping 'The Secret of Secrets' book

📚 Finished reading: The Secret of Secrets by Dan Brown.

This is Dan Brown’s sixth book in the Professor Langdon series. This time he’s got a girlfriend, Professor of Noetics Katherine Solomon. She’s about to publish a book whose contents not even Langdon is allowed to know, other than that it’s something fairly revelatory about consciousness.

Unsurprisingly, it doesn’t go all that smoothly. Powerful people don’t want the book to get to print. And they’ll go to even greater lengths than Meta did to stop it.

As ever, Brown’s book feels well-researched. Some of the studies it mentions, and the descriptions of the few notable locations I’m aware of, ring true, even if the events themselves are a little credulity-stretching at times. But hey, who wants to read about a load of boring normal stuff? And the Institute of Noetic Sciences is a real organisation. What is there is engaging and fast-paced.

As to the topic of Katherine’s book, well, who knows. But I did come away with the curious feeling that, despite being fictional, this book presented arguments for a “non-conventional reality”, let’s say, as convincing as some non-fiction books on the topic, albeit a lot more abbreviated.

I know people love to hate the author, Dan Brown. I have no idea how to judge his literary style. But nor do I really care, given that I really enjoy basically every one of his books.

Reform UK is mostly funded by very rich people with foreign interests: cui bono?

For all their plastic patriotism, Reform sure do get financed by a lot of people with extensive foreign interests.

About 66% of all the money donated to Reform during this parliament came from donors who are resident overseas or with offshore interests overseas.

For all their “man of the poor, beleaguered common people” shtick, they sure do get heavily financed by a few extraordinarily wealthy donors.

New research from Democracy for Sale shows that three-quarters of all donations to Reform have come from just three men: Christopher Harborne, Jeremy Hosking and Richard Tice

Not to get all conspiracy theoryish over this - I’ll leave that to some of their more deluded candidates - but it is surely of note that all these mega-rich folk with foreign interests - the very people that Reform at times pretend are the enemies of the people that only they can defend us against - are so enthusiastic for Reform to win.

Follow the money, cui bono, and all that jazz.

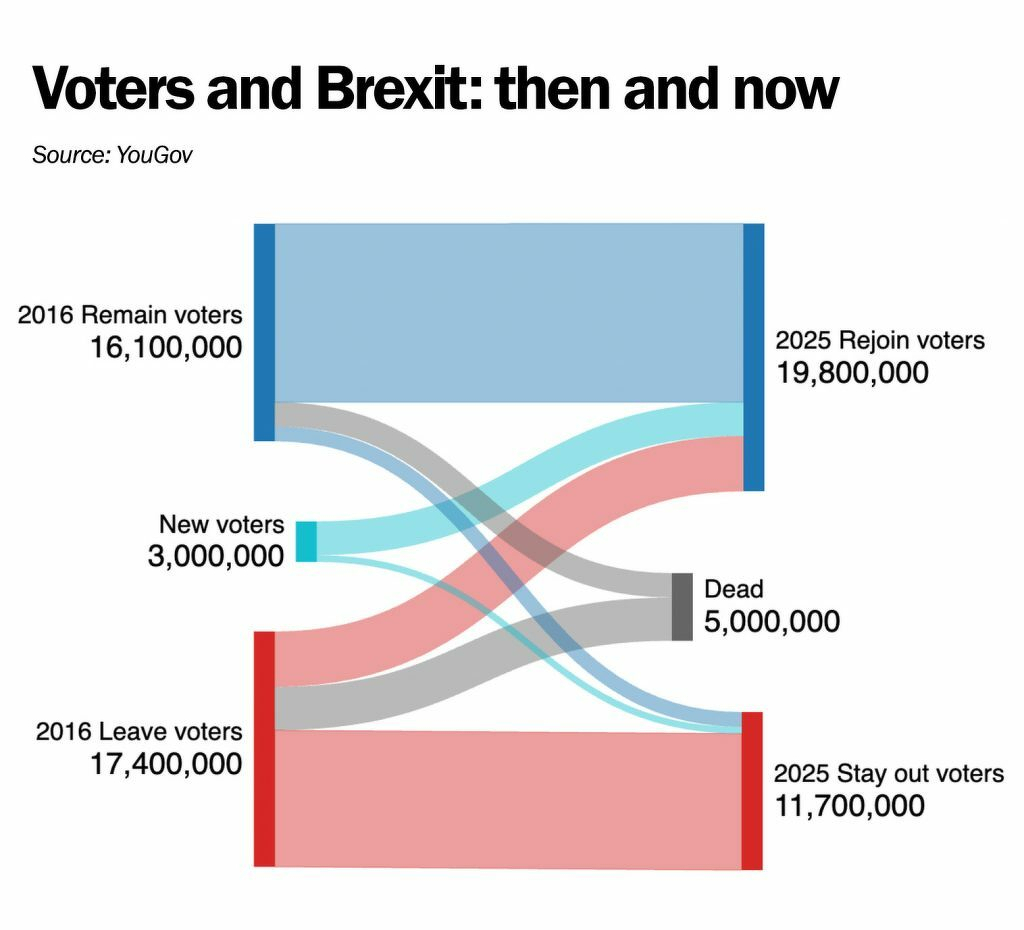

Demographic and attitude shifts suggest that if the Brexit referendum was held today, the pro-Remain side might win by around 8 million.

Peter Kellner uses YouGov data to estimate how many British people might vote for or against Brexit if the referendum happened again today. He comes out with a figure suggesting a rather anti-Brexit verdict today:

…the combined impact of demographics and changed minds is to convert a 1.3 million majority for leaving the EU into an 8.1 million majority for rejoining it.

Something like this can only be a rough estimate that is riddled with assumptions. But, if nothing else, it reminds us that, as with every election, whatever the result was in the past, it might not remain the same in the future. Things change. People change. Priorities change. That is, after all, why we have governmental elections every few years!

There are several dynamics at play here.

Firstly, older people were more likely to vote at all, and more likely to vote for Brexit. They are also, sadly, more likely to have died since the original referendum in 2016.

Secondly, some people who were too young to vote in 2016 are now old enough to vote. And the youngest cohort of voters polls as strongly in favour of rejoining the EU.

As Kellner, somewhat harshly, puts it:

We are told that it would be undemocratic to overturn the 2016 referendum result. After almost ten years, that requires a belief that the votes of the dead count for more than the views of the young.

Thirdly, some people who did vote in the previous referendum and are still alive to vote today have changed their minds. Changed their minds in either direction, of course, but YouGov polling suggests that shifting from pro-Brexit to anti-Brexit is rather more prevalent than the reverse; probably no surprise after the general catastrophe it turned out to be.

8% of those who voted Remain would now vote to stay out, while 29% of Leave voters want to rejoin.
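To get a feel for how much of the swing changed minds alone might account for, here is a deliberately naive Python sketch. It assumes the official 2016 totals (17,410,742 Leave, 16,141,241 Remain), applies the 8% and 29% switching rates quoted above to everyone, and ignores deaths, newly eligible voters and people who would now abstain. It is not Kellner’s method and is not meant to reproduce his 8.1 million figure.

```python
# Official 2016 EU referendum vote totals.
LEAVE_2016 = 17_410_742
REMAIN_2016 = 16_141_241

# Switching rates quoted above (assumed here to apply uniformly to all voters).
REMAIN_TO_LEAVE = 0.08  # Remain voters who would now vote to stay out
LEAVE_TO_REMAIN = 0.29  # Leave voters who would now vote to rejoin


def naive_rerun(leave: int, remain: int) -> tuple[float, float]:
    """Apply only the switching rates to the 2016 totals.

    Ignores deaths, new voters and abstentions, so this isolates the
    'changed minds' effect under very crude assumptions.
    """
    rejoin = remain * (1 - REMAIN_TO_LEAVE) + leave * LEAVE_TO_REMAIN
    stay_out = leave * (1 - LEAVE_TO_REMAIN) + remain * REMAIN_TO_LEAVE
    return rejoin, stay_out


rejoin, stay_out = naive_rerun(LEAVE_2016, REMAIN_2016)
print(f"2016 Leave majority: {(LEAVE_2016 - REMAIN_2016) / 1e6:.1f} million")
print(f"Naive rejoin majority: {(rejoin - stay_out) / 1e6:.1f} million")
```

On these crude assumptions, changed minds alone would turn the 1.3 million Leave majority into a rejoin majority of roughly 6.2 million; the gap to Kellner’s 8.1 million reflects demographic change and the simplifications made here.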

All in all, the estimated volumes involved in these dynamics can be visualised, as he does for his New World article, like this:

An NBER paper estimates that Brexit caused a massive cost to the UK's economy, employment and productivity

The National Bureau of Economic Research recently released a working paper, The Economic Impact of Brexit on the UK. It uses various simulations and estimation techniques to estimate what would have happened had Brexit never happened.

It doesn’t make for pleasant reading and undoubtedly helps explain some of the current mess that our country appears to be in. Whilst I haven’t been through the whole thing in detail as yet, in the abstract we learn that:

These estimates suggest that by 2025, Brexit had reduced UK GDP by 6% to 8%, with the impact accumulating gradually over time. We estimate that investment was reduced by between 12% and 18%, employment by 3% to 4% and productivity by 3% to 4%. These large negative impacts reflect a combination of elevated uncertainty, reduced demand, diverted management time, and increased misallocation of resources from a protracted Brexit process.

Back in the days of the referendum, the folk who raised concerns and produced analysis suggesting that there would likely be some adverse economic impact from disassociating ourselves from our nearest trading partner et al were often accused by the more rabid Brexiteers of creating a “project fear”, i.e. making fake doom-laden predictions just to scare the population into not voting as the likes of Farage, Johnson et al wanted them to.

It turns out that some of the forecasts were in fact wrong in the longer term. But wrong in the other direction: they underestimated the damage that would be done to the UK economy.

Comparing these with contemporary forecasts…shows that these forecasts were accurate over a 5-year horizon, but they underestimated the impact over a decade.

Gardner's 'Time for change' report calls for a positive vision of immigration in the UK, demanding policies that will benefit us all

Often I feel that those of us who dislike the continuous and unpatriotic efforts of various politicians, media and others to illegitimately demonise immigrants, in order to mask the real source of the country’s poor state, know what we hate. We know abject immorality and counter-productive policies of hostility when we see them. But we are less sure what exactly the concrete, positive policy for the future should be.

The report “Time for change: The evidence-based policies that can actually fix the immigration system”, from Zoe Gardner, presents 9 key recommendations. The full thing should perhaps be compulsory reading for anyone who is trying to form an opinion on the matter.

Below are the 9 key reforms the report demands:

- Safe routes

- The right to work and faster, better asylum decisions

- A not-for-profit asylum accommodation system

- Reform labour inspection and protections from workplace exploitation

- Scrap restrictive employer-sponsored visas

- Integrate asylum seekers into the points-based system

- A simplified, universal pathway to settlement after five years

- Reintroduce birthright citizenship and reduce integration barriers for children

- Embrace a positive narrative about immigration, diversity and belonging

The “why?” and “what about?” side of things is argued at length in the full report of course.

Something else the report brings up that I hadn’t thought of in a while is how the UK (and other countries) reacted to Ukrainian folk who wanted to flee Putin’s violence. We did not see anything like the negative frenzy around the relatively large numbers of people involved then that we see whenever the average small boat carrying a few people from amongst the world’s least privileged lands on our shores. Nor do we see endless newspaper stories today about Ukrainians “relying on handouts” or “stealing our jobs”, or whichever self-contradicting hot topic of the day it is.

There are obvious reasons why this is the case. But it is further evidence that another way is possible; indeed another way is essential.

In the UK, people who receive certain types of benefits get a £10 Christmas bonus each year.

Whilst that’s a cute and, given the current state of things, desperately needed extra from a kind of state Santa, it’s of note that this policy has existed since 1972. And, incredibly, it’s always been a nominal £10 every year since then.

Hence the amount has been absolutely ravaged by inflation. £10 in 1972 would be worth around £120 today. It’s probably about time the amount was updated.
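Taking the rough £120 figure at face value, a few lines of Python show what average annual inflation rate that implies, and what today’s £10 is worth in 1972 money. This is just compound-growth arithmetic: the £120 is the post’s approximation rather than an official index value, and “today” is assumed to mean 2025.

```python
def implied_annual_rate(start: float, end: float, years: int) -> float:
    """Average compound annual growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


BONUS = 10.0            # nominal Christmas bonus, unchanged since 1972
EQUIVALENT_NOW = 120.0  # rough inflation-adjusted value of 1972's £10
YEARS = 2025 - 1972     # assumes "today" means 2025

price_multiplier = EQUIVALENT_NOW / BONUS  # how many times prices have risen
rate = implied_annual_rate(BONUS, EQUIVALENT_NOW, YEARS)
real_value = BONUS / price_multiplier      # today's £10 expressed in 1972 pounds

print(f"Prices have risen about {price_multiplier:.0f}x")
print(f"Implied average inflation: {rate:.1%} a year")
print(f"Today's £10 is worth about £{real_value:.2f} in 1972 money")
```

On those assumptions, prices have risen roughly twelvefold at a little under 5% a year on average, so this year’s bonus buys about what 83p did in 1972.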

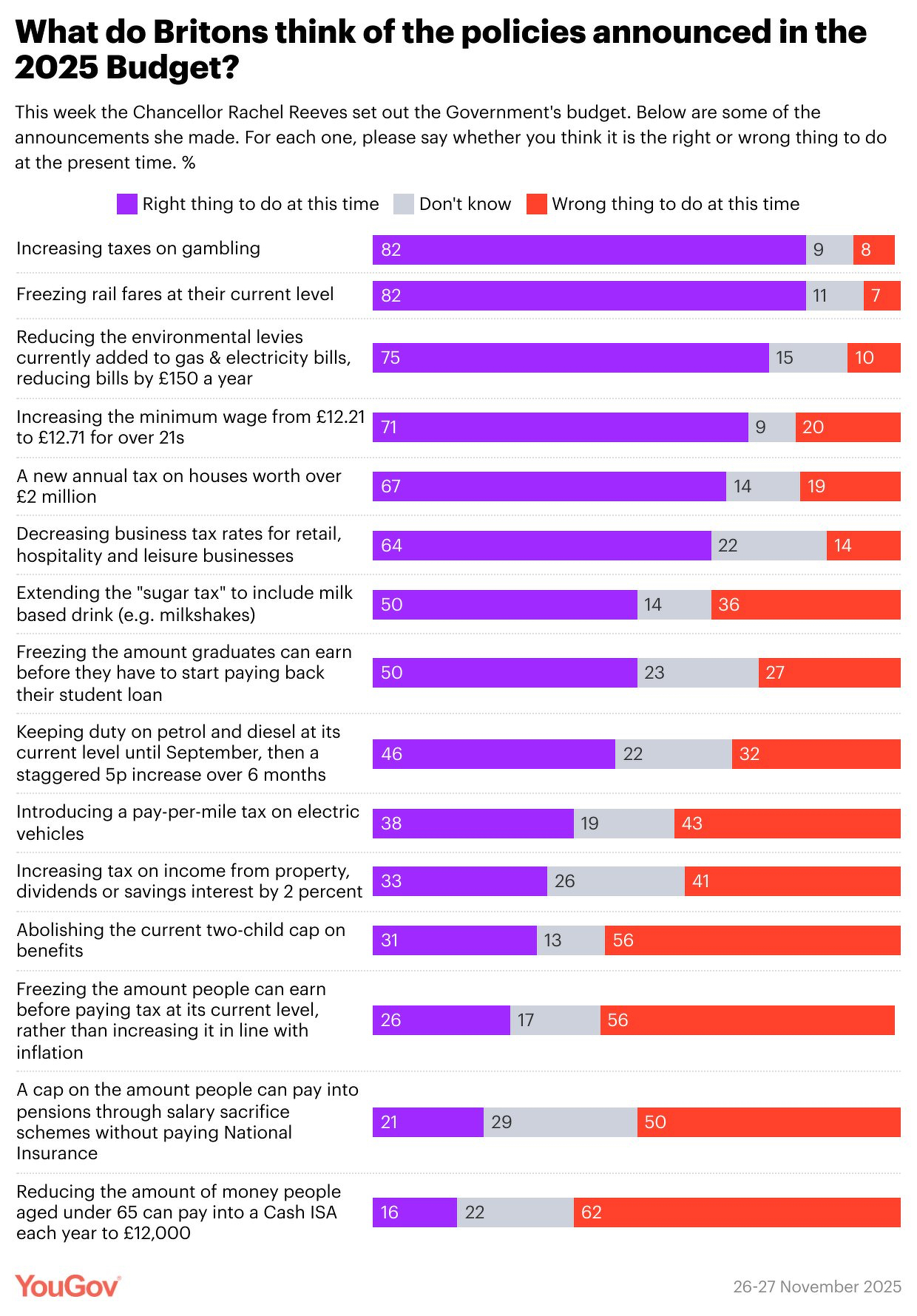

The public reaction to last week's UK budget.

After a lead up mired in chaos and leaks, the UK’s new budget dropped last week. At first glance it is substantially less terrible than I had feared.

Not everyone agrees, of course, because not everyone agrees on anything any more. YouGov did some interesting polling on the public reaction to its individual components, shown below.

Probably the one I’m most confused / despondent about is the negative public reaction to scrapping the two-child benefit limit.

A majority of Britons thought this was a bad move. But how anyone could imagine this was the wrong thing to do is beyond me, given it was a policy that condemned hundreds of thousands of children to poverty whilst seemingly failing to achieve its self-declared aims whatsoever.

Innocent children should not be punished no matter how poorly you believe their parents have behaved.

🎥 Watched Cruella.

The supposed origin story of everyone’s least favourite Dalmatian-murderer.

Watch as the troubled wannabe fashion designer Estella Miller lives out her unfortunately transformational life experiences. Not sure the story lines up 100% with the previously accepted nature of Cruella - but it was a surprisingly entertaining take on it all.

Not to be all Cruella, but my only real complaint was with Estella’s beloved dogs and their peers. I don’t know what kind of weird AI they animated them with, but I wish they…hadn’t.

The 14 common features of fascism according to Umberto Eco

As summarised by Open Culture, in his relatively famous essay Umberto Eco documented what he saw as fourteen signs of fascist regimes in general, even though fascism may manifest itself in a variety of different ways in the details:

…the fascist game can be played in many forms, and the name of the game does not change. … These features cannot be organized into a system; many of them contradict each other, and are also typical of other kinds of despotism or fanaticism. But it is enough that one of them be present to allow fascism to coagulate around it.

As so many, many people have observed before now, it goes without saying that some of these seem very relevant today.

- The cult of tradition. “One has only to look at the syllabus of every fascist movement to find the major traditionalist thinkers."

- The rejection of modernism. “The Enlightenment, the Age of Reason, is seen as the beginning of modern depravity.”

- The cult of action for action’s sake. “Action being beautiful in itself, it must be taken before, or without, any previous reflection".

- Disagreement is treason.

- Fear of difference. “The first appeal of a fascist or prematurely fascist movement is an appeal against the intruders. Thus Ur-Fascism is racist by definition.”

- Appeal to social frustration. “One of the most typical features of the historical fascism was the appeal to a frustrated middle class, a class suffering from an economic crisis or feelings of political humiliation, and frightened by the pressure of lower social groups.”

- The obsession with a plot. “The followers must feel besieged.”

- The enemy is both strong and weak. “By a continuous shifting of rhetorical focus, the enemies are at the same time too strong and too weak.”

- Pacifism is trafficking with the enemy.

- Contempt for the weak.

- Everybody is educated to become a hero. With a resulting embrace of a cult of death - fascist heroes should fight to the death, and send other people to their death.

- Machismo and weaponry.

- Selective populism. “…the emotional response of a selected group of citizens can be presented and accepted as the Voice of the People.”

- Newspeak. “All the Nazi or Fascist schoolbooks made use of an impoverished vocabulary, and an elementary syntax, in order to limit the instruments for complex and critical reasoning.”

📚 Finished reading Deadline by Steph McGovern.

Deadline or Dead line? Either would make sense. Officially it seems to be the former.

This is a quick-to-read thriller telling the story of (fictional) TV presenter Rose, who is at her career high: an exclusive interview with Britain’s Chancellor of the Exchequer. Then all of a sudden, in front of an audience of millions, the guidance from her studio team in her earpiece is replaced by something much more disturbing.

The author is herself a broadcaster: after various presenting jobs and more with the BBC, she currently co-hosts the popular economics podcast The Rest Is Money. So she no doubt has some domain knowledge of what she writes about. Hopefully, though, the events of the book are not quite as easy to pull off as they seem.

📺 Watched You season 5.

In this, the final season of the show, our apparently charming psychopath Joe is back, enjoying a pretty normal family life, for the elite at least, with his rich and famous wife and child. Of course it doesn’t take too long until he convinces himself of the need to protect them from harm in rather disturbing ways. If you enjoyed the last few seasons I’m sure you’d enjoy this one too.

Having developed a recent-ish interest in the world of OSINT, I noticed that this season shows both sides leveraging these sorts of techniques. It’s certainly not an educational programme. More a reminder not to embrace the truly dark and evil side of the hobby.

Prem Sikka's progressive ideas for Labour's budget this week

Prem Sikka has put forward a few progressive-oriented ideas for the Chancellor to deliver in her much anticipated/dreaded budget due to drop in the UK this coming week.

The whole thing has, so far, been a real mess. And the way things are going, sadly I’m not so sure “progressive” is the vibe they’ll go for. But it’s nice that someone associated with Labour is thinking about these things. We can but hope.

To summarise:

- An end to the 2-child benefit cap.

- Abolishing VAT on domestic fuel.

- Increasing the tax free personal income tax allowance.

- Stopping the payment of interest on commercial banks' central bank reserves (I hadn’t actually realised this was a relatively new thing, and that other EU countries have already moved away from it)

- Borrow more to invest, which ends up being much cheaper than e.g. using Private Finance Initiatives.

- Increase the capital gains tax rates to the same rate that we already pay on wages, potentially even adding national insurance to the mix.

- Likewise tax dividends the same way as we do income, potentially adding NI too.

- You could even charge higher rates of tax on passive or wealth-based income - this has existed in the past called the “investment income surcharge”.

- Restrict the rate of tax relief on pension contributions to 20%.

- Make people paid as “partners” - e.g. at a law firm - pay national insurance, the same as the rest of us workers do.

- Reform council tax so that the very wealthy pay more. Apparently the council tax on a home worth £32 million is the same as that on one worth £320k today, which seems…suboptimal.

- Increase the take from corporation tax - perhaps by removing the exemptions and allowances that are abused as well as considering changing the rate.

Some of these might not be entirely as redistributive as I’d prefer. For example, increasing the personal income tax allowance may well benefit the at-least-moderately-rich more than the poorer folk. But the general idea behind the article seems to be to suggest a set of policies that do not necessarily break Labour’s (likely foolish) manifesto promises on not raising the major taxes, and that do not hit the wallets of the poorer half of the population as such. He also suggests that aligning taxes on wealth with those on wages could reduce tax avoidance.

Easily download all your completed Datacamp course materials for future offline usage

Datacamp is one of the many sites where you can sign up to learn various types of coding. This one is mostly targeted at data analysts, scientists and engineers, teaching you R, Python, SQL and some statistics, amongst other stuff.

It is a mix of videos, slides and interactive exercises. You are easily able to download the slides as PDFs using the on-site feature, which is nice (at least as long as you’re paying for a subscription). But you can only do this 1 chapter at a time which is cumbersome when you’ve done more than a handful of courses.

Of course some clever folk have found a way to automate this. Many attempts seem to have originated from this code from TRoboto called “datacamp-downloader” which is supposed to let you download everything you could imagine related to the Datacamp courses you’ve completed - slides, videos, completed exercises and so on. For some reason, I personally couldn’t get this to work on many of my completed courses though.

But there are many forks of this base code! I’ve been using this one from vicky-dx which, at least for me, works a lot more reliably.

Assuming you have already got Python and git installed - install them first if not! - it’s just as simple as the onsite instructions indicate - even on a Windows machine!

Note that I think you may also need to install the Google Chrome web browser if, like me, you didn’t already have it installed on your computer. I’m not certain about that, but it appeared to help with my early efforts to get this working.

Anyway, once that’s all done, get yourself a command prompt in the folder above the one in which you’d like to install this software and:

```shell
git clone https://github.com/vicky-dx/datacamp-downloader.git
cd datacamp-downloader
pip install -e .
```

Then the best way (in my experience) to log on is as detailed on the site under option 1:

```shell
datacamp set-token [TOKEN]
```

where [TOKEN] is the value of the _dct cookie that datacamp provides you when you log in in a standard browser.

How to get that? It’s a bit of a faff, but not hard if you can follow instructions!

Per the project’s docs:

Firefox

Visit datacamp.com and log in. Open the Developer Tools (press Cmd + Opt + J on MacOS or F12 on Windows). Go to Storage tab, then Cookies > https://www.datacamp.com Find _dct key, its Value is the Datacamp authentication token.

Chrome

Visit datacamp.com and log in. Open the Developer Tools (press Cmd + Opt + J on MacOS or F12 on Windows). Go to Application tab, then Storage > Cookies > https://www.datacamp.com Find _dct key, its Value is the Datacamp authentication token.

The token will be a very long string of random looking characters, so you’re best to paste it onto the end of the above command.
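If you would rather not paste the token straight onto the command line, where it can end up in your shell history, a small Python wrapper can read it from an environment variable and hand it to `datacamp set-token` for you. This is a hypothetical helper, not part of the downloader: the variable name `DATACAMP_TOKEN` is my own choice, and it assumes the `datacamp` command from the fork above is on your PATH.

```python
import os
import subprocess
from typing import Callable, Sequence

TOKEN_VARIABLE = "DATACAMP_TOKEN"  # hypothetical name; any variable would do


def set_token_command(token: str) -> list[str]:
    """Build the `datacamp set-token <TOKEN>` invocation as an argument list."""
    token = token.strip()  # copy-pasted cookie values often carry stray whitespace
    if not token:
        raise ValueError("the _dct token is empty")
    return ["datacamp", "set-token", token]


def set_token_from_env(
    run: Callable[[Sequence[str]], object] = lambda cmd: subprocess.run(cmd, check=True),
) -> None:
    """Read the token from the environment and pass it to the CLI."""
    token = os.environ.get(TOKEN_VARIABLE, "")
    run(set_token_command(token))


if __name__ == "__main__":
    set_token_from_env()
```

Passing an argument list to `subprocess.run` (rather than a single command string) also sidesteps any shell quoting problems with such a long token.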

Then you have access to commands like:

```shell
datacamp courses
datacamp tracks
```

which let you list your completed courses and tracks respectively. Once you have run those, you can then use `datacamp download` to download one, several or all of the tracks/courses by the ID numbers that the first two commands give you. There are many options for what exactly to download per course. Personally I was not interested in saving copies of the videos, so I ran

```shell
datacamp download --no-videos --subtitles none 1
```

to download the first course. This gave me the exercise questions, solutions, video scripts, datasets and so on, but not the videos or the subtitles.

Chrome opens and logs in, the command prompt keeps you updated with what’s going on, and you can just sit back and wait for the relevant files to be downloaded to your computer for future storage and use. It’s a great time saver.
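If you’d rather queue up a long list of courses and not have one failure hold up the rest, a small Python wrapper can run the command above once per course ID. This is a hypothetical sketch rather than part of the downloader: the helper names are mine, it reuses only the `--no-videos --subtitles none` options quoted above, and it assumes the `datacamp` command exits with a non-zero status when a download fails, which I haven’t checked.

```python
import subprocess
from typing import Callable, Iterable, Sequence

# Same options as the command above: skip videos and subtitles.
DOWNLOAD_OPTIONS = ["--no-videos", "--subtitles", "none"]


def download_command(course_id: int) -> list[str]:
    """Build `datacamp download --no-videos --subtitles none <id>`."""
    return ["datacamp", "download", *DOWNLOAD_OPTIONS, str(course_id)]


def run_command(cmd: Sequence[str]) -> int:
    """Run one CLI command and return its exit code."""
    return subprocess.run(cmd).returncode


def download_each(
    course_ids: Iterable[int],
    run: Callable[[Sequence[str]], int] = run_command,
) -> list[int]:
    """Download courses one at a time and return the IDs that failed."""
    failed = []
    for course_id in course_ids:
        if run(download_command(course_id)) != 0:
            failed.append(course_id)
    return failed


if __name__ == "__main__":
    # Hypothetical IDs: substitute the numbers `datacamp courses` lists for you.
    print("Failed:", download_each([1, 2, 3]))
```

Any IDs it reports as failed can simply be retried later with the plain `datacamp download` command.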

There’s also, specific to this fork, the option to download “in progress” courses, which might be handy if you want to use the files as reference material. For that, you can use:

```shell
datacamp ongoing # to list ongoing courses
datacamp download-ongoing [list of course IDs from the above] # to download
```

once you’re logged in.

Note that downloading can take a long time if you’ve completed many courses. My first download took several hours.
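Because a full run can take hours, it may be worth keeping a simple record of which courses have already finished, so that a restarted run skips them. A minimal Python sketch, assuming a plain-text progress file (the name `downloaded_ids.txt` is my own invention, not something the tool creates), the same download command as above, and that the command exits with status 0 on success:

```python
import subprocess
from pathlib import Path
from typing import Callable, Iterable

PROGRESS_FILE = Path("downloaded_ids.txt")  # hypothetical progress log


def datacamp_download(course_id: int) -> bool:
    """Run the download command from above; True if it exited cleanly."""
    cmd = ["datacamp", "download", "--no-videos", "--subtitles", "none", str(course_id)]
    return subprocess.run(cmd).returncode == 0


def resume_downloads(
    course_ids: Iterable[int],
    progress: Path = PROGRESS_FILE,
    download: Callable[[int], bool] = datacamp_download,
) -> list[int]:
    """Download only IDs not already logged as done; return the newly finished ones."""
    done: set[int] = set()
    if progress.exists():
        done = {int(line) for line in progress.read_text().split()}
    finished = []
    for course_id in course_ids:
        if course_id in done:
            continue  # completed in an earlier run
        if download(course_id):
            # Append straight away so an interrupted run keeps its progress.
            with progress.open("a") as log:
                log.write(f"{course_id}\n")
            finished.append(course_id)
    return finished


if __name__ == "__main__":
    # Hypothetical IDs: substitute the numbers `datacamp courses` lists for you.
    print("Finished this run:", resume_downloads([1, 2, 3]))
```

Writing each ID as soon as its download succeeds, rather than at the end, is what lets an interrupted multi-hour session pick up where it left off.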

🎶 Listening to Dreams on Toast by The Darkness.

Another long-serving band releasing an album in 2025 with music that harks back to their signature glam-rock infused albums of yesteryear. You’d recognise this band anywhere.

Apparently they wrote around 150 songs before settling on the 10 that actually ended up on the album, which sounds like a whole lot of work, but the end result was worth it.

🎶 Listening to Mayhem by Lady Gaga.

Gaga is back with her 6th solo album. Doesn’t time fly? I especially liked her music in the earlier “The Fame” days, so I was excited to find out that this one is to some extent a return to the form of her older days, gothicish disco funk et al, and so much the better for it.

Also includes the duet-with-Bruno-Mars song “Die with a Smile”, which is a bit cheesy and played everywhere, sure, but incredibly catchy.