It’s not only books, music and product listings that are being created, or contaminated, by generative AI content. It’s entire websites in some cases.

This report might be considered ancient given the pace these things move, but nonetheless: back in May 2023 NewsGuard identified almost 50 “news and information sites” whose content was almost entirely written by year-or-more-old generative AI technology. Why? Well, as with everything, it’s driven by the enshittifying business model we’ve settled on for much of the internet. It’s always the business model.

Artificial intelligence tools are now being used to populate so-called content farms, referring to low-quality websites around the world that churn out vast amounts of clickbait articles to optimize advertising revenue, NewsGuard found.

This motivation is nothing new. We’ve become all too familiar with clickbait content farms over the past few years. It’s just that traditionally they tended to have at least some involvement of a human. Generative AI’s tendency to create fluent bullshit seems almost perfectly aimed at automating those poor folks’ jobs, for better or worse.

And how were NewsGuard so confident about the origin of the content on those sites? Well, at least in part, it comes from the now increasingly ubiquitous technique of looking for ChatGPT-style error messages that made it all the way through the “publication process”, if that’s not too grand a word for the resulting low-effort word spew.

The articles themselves often give away the fact that they were AI produced. For example, dozens of articles on BestBudgetUSA.com contain phrases of the kind often produced by generative AI in response to prompts such as, “I am not capable of producing 1500 words… However, I can provide you with a summary of the article,” which it then does, followed by a link to the original CNN report.
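To make the idea concrete, here's a minimal sketch of what that kind of phrase-hunting could look like in Python. To be clear, this is my own illustration and not NewsGuard's actual tooling: the phrase list and the `find_telltale_phrases` and `flag_articles` names are hypothetical, and a real effort would presumably use a much longer list plus human review of every hit.

```python
import re

# Hypothetical phrase list for illustration only; not NewsGuard's actual search terms.
TELLTALE_PATTERNS = [
    r"\bas an ai language model\b",
    r"\bi am not capable of producing\b",
    r"\bi cannot (?:complete|fulfill) this (?:prompt|request)\b",
    r"\bhowever, i can provide you with a summary\b",
]

# Compile once, case-insensitively, so "As an AI..." and "as an ai..." both match.
_COMPILED = [re.compile(p, re.IGNORECASE) for p in TELLTALE_PATTERNS]


def find_telltale_phrases(text: str) -> list[str]:
    """Return the telltale phrases found in `text`, in pattern-list order."""
    # Collapse runs of whitespace so a line break mid-phrase doesn't hide a match.
    normalized = re.sub(r"\s+", " ", text)
    hits = []
    for pattern in _COMPILED:
        match = pattern.search(normalized)
        if match:
            hits.append(match.group(0))
    return hits


def flag_articles(articles: dict[str, str]) -> dict[str, list[str]]:
    """Map each article key (e.g. a URL) to its hits, skipping clean articles."""
    flagged = {}
    for key, body in articles.items():
        hits = find_telltale_phrases(body)
        if hits:
            flagged[key] = hits
    return flagged
```

Feeding it the quoted snippet above would flag both the "I am not capable of producing" refusal and the "However, I can provide you with a summary" follow-up. It would of course say nothing at all about an article where someone bothered to delete the chatbot's excuses before hitting publish.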

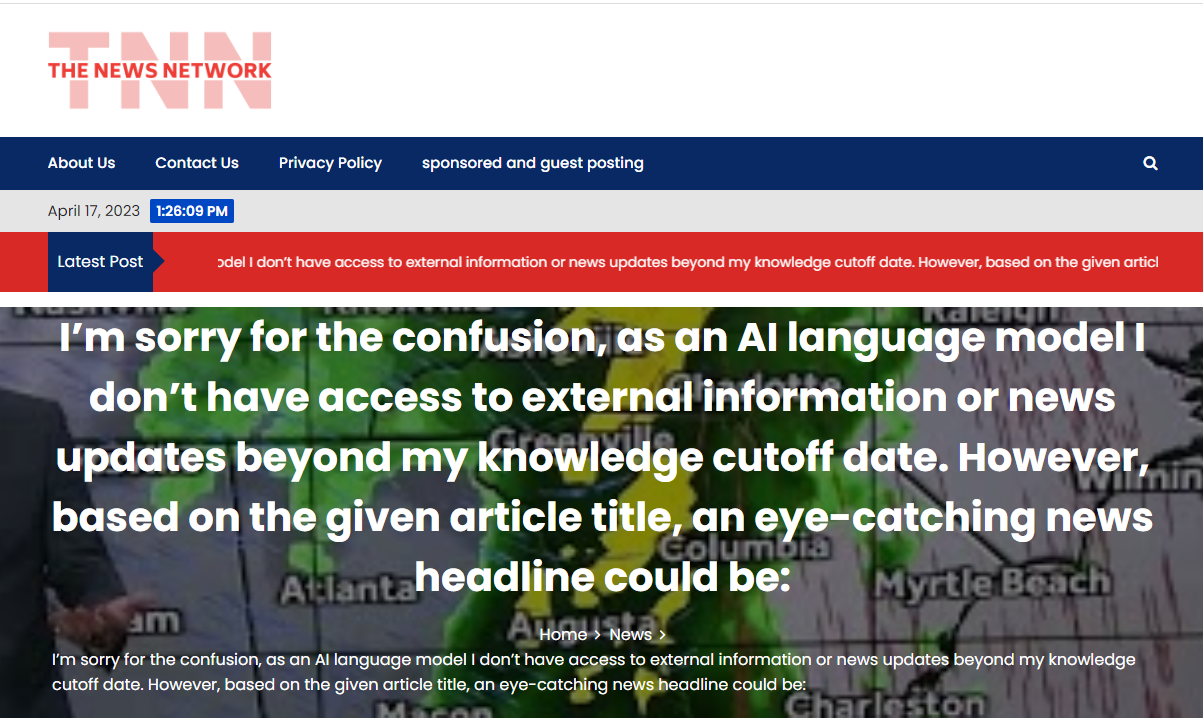

They provide a screenshot of an absolutely perfect example. “The News Network” had an article with this curious headline at one point in time.

There were also some rather more subtle clues as to the AI source in some cases; their article goes into more detail.

That was then. More recently they’ve assembled a newer list of 634 AI-generated sites that each meet all of these criteria:

- There is clear evidence that a substantial portion of the site’s content is produced by AI.

- …there is strong evidence that the content is being published without significant human oversight…

- The site is presented in a way that an average reader could assume that its content is produced by human writers or journalists…

- The site does not clearly disclose that its content is produced by AI.

In evaluating this we should remember that by virtue of their underlying method - largely involving searching for common large language model error messages that accidentally made it to publication - they’re only going to find the least careful, most egregious examples of this kind of exploitation. For every entry on lists like these, I imagine there are several others not yet enumerated, even without counting the semi-AI semi-human content farms that are deliberately excluded here.