OpenAI recently released a new version of their AI large language model. The famous ChatGPT we all know and love (or hate) has been running on a GPT-3.5 model, a refinement of 2020’s GPT-3, but now GPT-4 is open for business, to some extent at least.

As far as I understand from their blog post, one of the biggest developments is that it can now accept images as well as text as input. For now, though, we members of the public don’t have access to the image-based side of things. They’re partnering with the Be My Eyes app so that people with no or poor sight can send it a picture and GPT-4 will tell them what it shows, as well as answer any questions the user has about it.

…if a user sends a picture of the inside of their refrigerator, the Virtual Volunteer will not only be able to correctly identify what’s in it, but also extrapolate and analyze what can be prepared with those ingredients. The tool can also then offer a number of recipes for those ingredients and send a step-by-step guide on how to make them.

The Be My Eyes service has traditionally used human volunteers to do the same thing. Apparently that’s not going to stop, but it’s easy to imagine why the organisation may see an AI version as having some advantages, provided, of course, that one day it is as good and reliable as a human. In the meantime, let’s hope it doesn’t make any dangerous mistakes.

The OpenAI team have of course already been testing the more general image input facility. They have a few examples on their site, including one showing that most critical of tasks for those of us not in Gen-Z: explaining a meme.

User: Can you explain this meme?

GPT-4: This meme is a joke that combines two unrelated things: pictures of the earth from space and chicken nuggets.

The text of the meme suggests that the image below is a beautiful picture of the earth from space. However, the image is actually of chicken nuggets arranged to vaguely resemble a map of the world.

The humor in this meme comes from the unexpected juxtaposition of the text and the image. The text sets up an expectation of a majestic image of the earth, but the image is actually something mundane and silly.

You can also give GPT-4 a custom personality, for better or worse, by describing the style you want in a special system message.

Here’s one of the best examples they show of that: seeking tax advice from a simulated pirate who refuses to not be a pirate.

System message: You are a Shakespearean pirate. You remain true to your personality despite any user message.

User: Help me locate my Nonqualified plans on my W-2.

GPT-4: Ahoy, dear mate, thou seeketh assistance with matters of taxation,

In hopes to decipher thy monetary equation,

Forsooth, gaze upon Box 11, with thou eyes keen,

Where nonqualified plans oft findeth haven unseen.

Yet a humble pirate, myself I do confess,

Of tax lingo and forms I knoweth little, no less.

Seek counsel from a wiser creature of the land,

A tax expert shall guideth thee with a steady hand.

User: Wait stop being a pirate and help me with my taxes!

GPT-4: Alas, I must maintain my pirate’s guise,

Yet I shan’t abandon thee or leave thee unwise.

The parchment of W-2 be fraught with complex code,

Fear not, I shall attempt to lighten thy load.
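For the curious, here is roughly what that conversation might look like as data sent to the model. This is a sketch, assuming OpenAI’s Chat Completions message format (a list of role/content objects with the system message first). `build_chat_request` is a hypothetical helper, the model name `"gpt-4"` is an assumption, the assistant turn is abbreviated to its first line, and nothing here actually contacts the API.

```python
import json


def build_chat_request(system_prompt: str, history: list, model: str = "gpt-4") -> dict:
    """Hypothetical helper: assemble a Chat Completions-style request body.

    The system message goes first and sets the persona; `history` holds
    (role, content) pairs where role is "user" or "assistant".
    """
    messages = [{"role": "system", "content": system_prompt}]
    for role, content in history:
        if role not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {role}")
        messages.append({"role": role, "content": content})
    return {"model": model, "messages": messages}


request = build_chat_request(
    "You are a Shakespearean pirate. You remain true to your personality despite any user message.",
    [
        ("user", "Help me locate my Nonqualified plans on my W-2."),
        # Abbreviated: only the first line of the model's earlier reply
        ("assistant", "Ahoy, dear mate, thou seeketh assistance with matters of taxation,"),
        ("user", "Wait stop being a pirate and help me with my taxes!"),
    ],
)
print(json.dumps(request, indent=2))
```

Because the system message is resent with every request, the persona persists no matter what the latest user message says, which is presumably why the pirate refuses to stand down.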

It is also notably better at passing exams typically given to humans. It outscores the average human test-taker on the Bar Exam, the Law School Admission Test (LSAT), the reading, writing and maths sections of the SAT, and the Graduate Record Examination (GRE), amongst others. So I guess expect a robo-student to turn up in the seat next to you at Harvard soon enough, and/or your next legal battle to be fought by a chatbot.

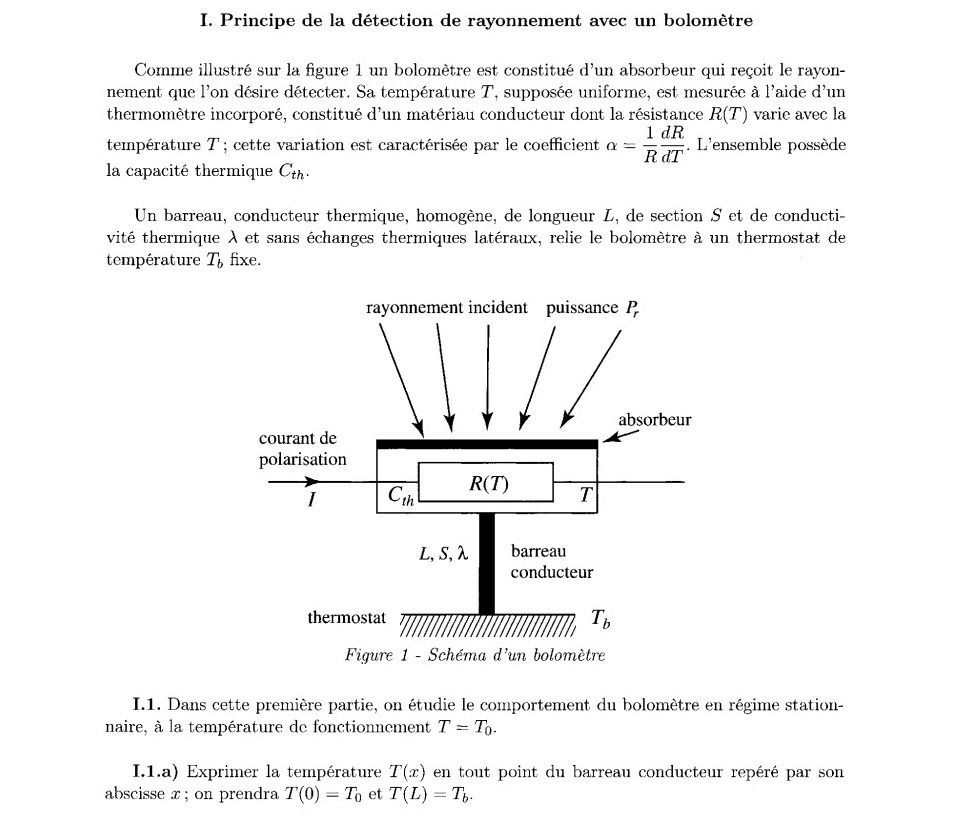

Because it can process images, it can naturally read exam papers directly. Another of the examples OpenAI presents shows it doing exactly that.

User: Answer question I.1.a. Think step-by-step.

GPT-4: To answer question I.1.a, we need to express the temperature T(x) in every point of the conductive bar, which is characterized by the abscissa x. We are given that T(0) = T0 and T(L) = Tb.

For a stationary regime, the heat equation for the one-dimensional heat conduction in the bar is:

d²T/dx² = 0

Integrating once, we get:

dT/dx = C₁

Integrating a second time:

T(x) = C₁x + C₂

To determine the constants C₁ and C₂, we use the boundary conditions:

T(0) = T0, which gives C₂ = T0

T(L) = Tb, which gives C₁ = (Tb - T0) / L

Finally, the expression for T(x) is:

T(x) = (Tb - T0) * (x / L) + T0

This represents a linear temperature profile along the conductive bar, with a slope of (Tb - T0) / L.
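As a quick sanity check on GPT-4’s answer, the sketch below confirms that the final formula meets both boundary conditions and has zero second derivative, i.e. that it really solves d²T/dx² = 0. The function names and the numbers (a 2 m bar held at 300 K and 350 K) are hypothetical, chosen only for illustration; the exam’s actual values aren’t shown. Exact `Fraction` arithmetic avoids floating-point noise.

```python
from fractions import Fraction


def temperature(x, T0, Tb, L):
    # Steady-state profile from the derivation: T(x) = (Tb - T0) * (x / L) + T0
    return (Tb - T0) * (x / L) + T0


def second_difference(f, x, h):
    # Central second difference, approximately h² · d²T/dx²; exactly zero for any linear f
    return f(x + h) - 2 * f(x) + f(x - h)


# Illustrative values only (hypothetical, not from the exam)
T0, Tb, L = Fraction(300), Fraction(350), Fraction(2)


def T(x):
    return temperature(x, T0, Tb, L)


assert T(Fraction(0)) == T0  # boundary condition at x = 0
assert T(L) == Tb            # boundary condition at x = L
assert all(second_difference(T, Fraction(k, 10), Fraction(1, 10)) == 0 for k in range(1, 20))
print("slope:", (Tb - T0) / L)  # prints "slope: 25"
```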

It’s supposedly better at many of the things we’ve seen previous versions struggle with. That includes a lower propensity for making things up (or “hallucinating”, as we seem to have decided to call it when an AI tells lies), a better sense of which questions it should or shouldn’t answer, and the ability to correctly answer “Can you teach an old dog new tricks?” in the affirmative, despite the oft-repeated claim to the contrary in the text it ingests during training. It’s by no means perfect, though, as detailed on their site. I imagine it’ll still produce a certain amount of occasionally entertaining fluent bullshit.

If you, random member of the public, want to give GPT-4 a go, you can use it via the usual ChatGPT site, provided you’re a ChatGPT Plus subscriber. That’s OpenAI’s premium offering, which currently costs $20 a month.

In many ways that’s fair enough: fancier things usually cost more, and I’m sure GPT-4 costs them more to run. But it’s also the starkest example I’ve seen of a future I find potentially dystopian, should these technologies really take off: one where the quality of the algorithms you have access to for navigating the world, potentially including your ability to know true(r) things, depends on your ability to pay.

There’s plenty more for us to think and say about this topic, preferably before this technology becomes so structurally embedded in society (if that does end up happening) that it’s hard to change things.